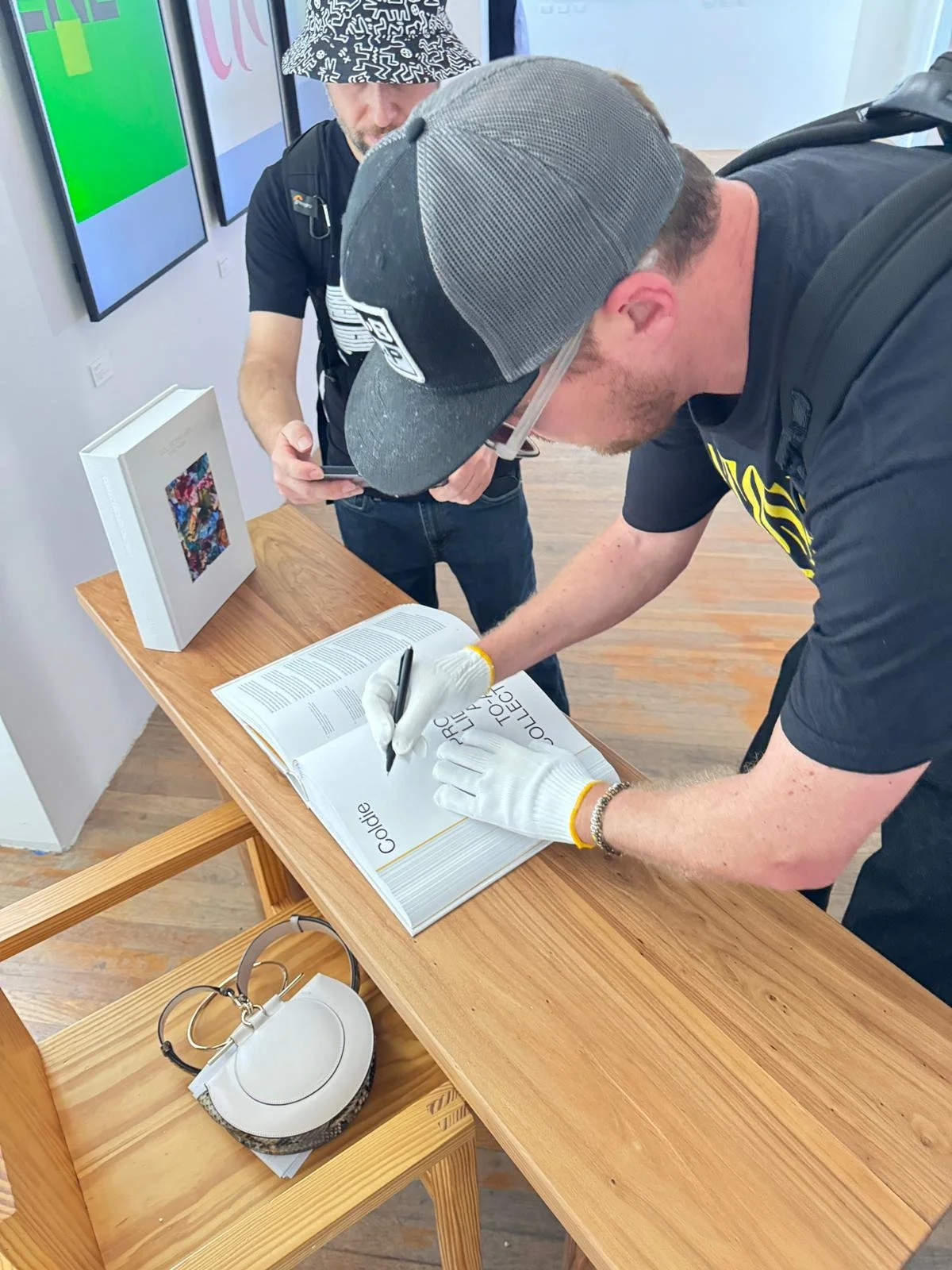

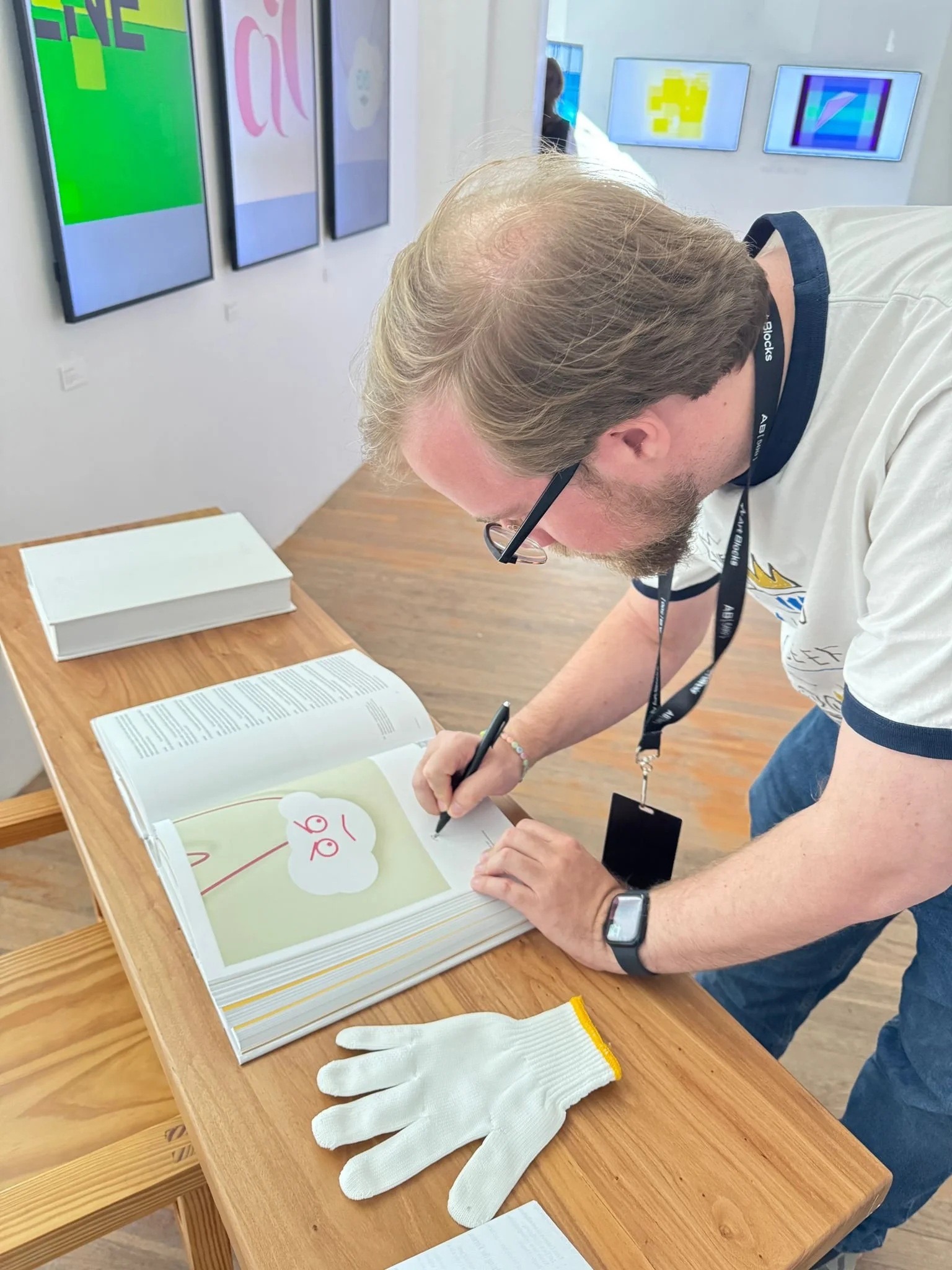

The Interview | Art Collector thefunnyguys

As part of my ongoing project Collecting Art Onchain, an initiative dedicated to documenting and preserving blockchain-native culture through conversations with early collectors, I sat down with thefunnyguys (TFG), an early generative art collector and the co-founder of Le Random and Raster.art.

thefunnyguys belongs to a generation of collectors who entered the space through experimentation with crypto, NBA Top Shot, and pure curiosity, and who gradually moved toward generative art not as a trend, but as a conviction.

His trajectory mirrors the maturation of the field itself: from personal acquisition to institutional framing, from participation to infrastructure-building. Through Le Random, he has helped shape one of the most historically conscious generative art collections operating today, spanning pioneers of the 1960s to contemporary on-chain artists. Through Raster, he addresses one of the most pressing structural challenges in digital art: fragmentation and cross-chain legibility.

What emerges in this conversation is not a collector chasing legacy, but someone responding to gaps in context, in infrastructure, in continuity. His path reflects a broader shift within the digital art ecosystem: from acceleration to consolidation, from speculation to stewardship.

Before we sat down for this conversation, thefunnyguys asked me a simple but revealing question: why did I call the book Collecting Art Onchain - and not digital art, contemporary art, or something else?

It felt like the right place to begin.

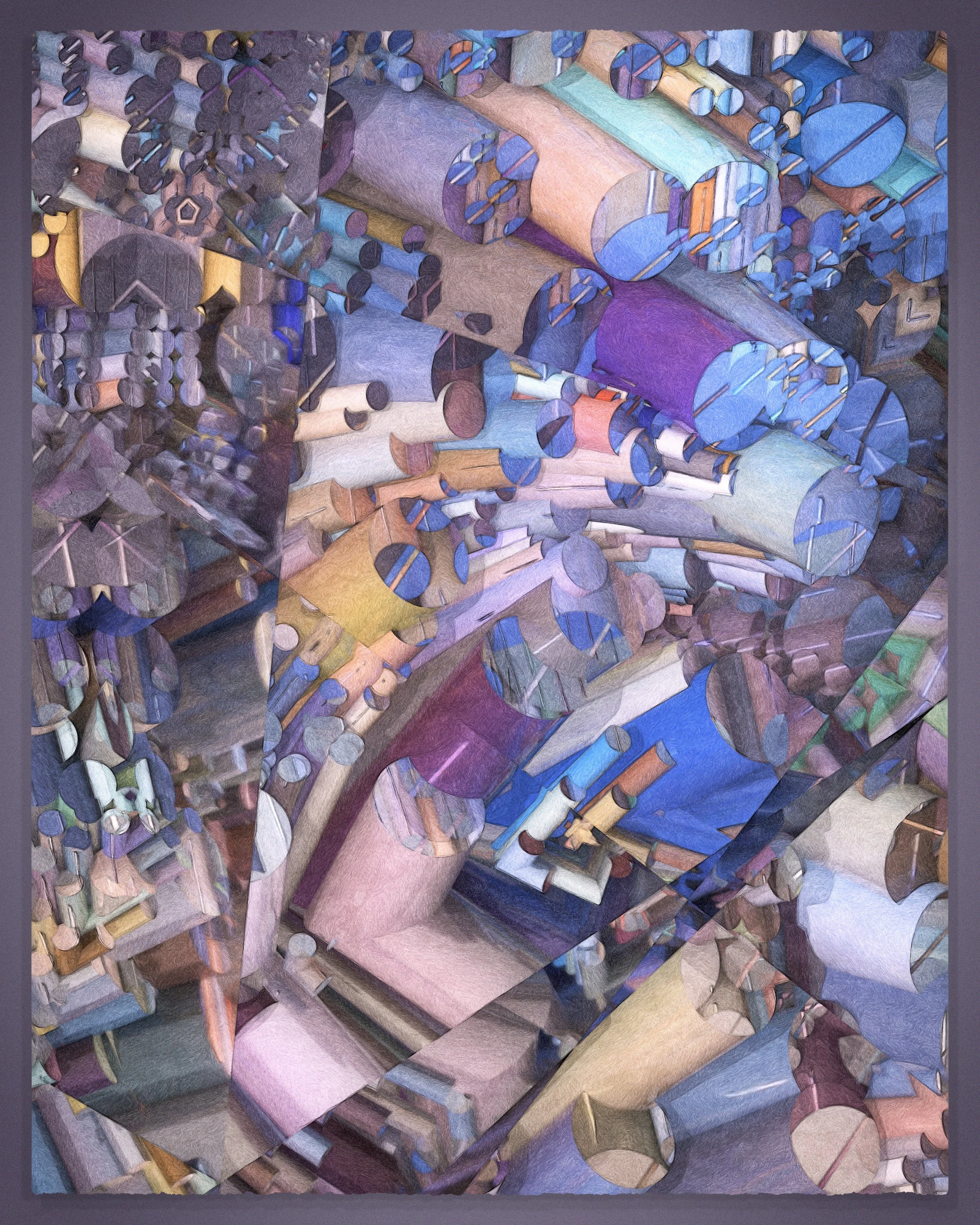

Manoloide, plasma004, 2021. Courtesy of thefunnyguys Collection and the artist.

TFG: Why does Collecting Art Onchain focus specifically on blockchain-native art rather than digital art more broadly?

KV: For me, the answer is quite precise. The moment you say “digital art,” the scope explodes: video, net art, software art, decades of pre-blockchain history. It becomes impossible to speak meaningfully about one thing without addressing everything.

What interested me instead was something much narrower - and paradoxically much more radical: the decade in which blockchain introduced digital scarcity, decentralised ownership, and a new social layer around art.

That small signal - which only a handful of collectors picked up early - didn’t just produce artworks. It produced an entirely new ecosystem. A parallel art world: unfinished, messy, still forming, but undeniably real.

But let’s focus on you now. How did you engage with the space?

TFG: You entered this space very early and have been part of it longer than most, through your curatorial work and through exhibitions that many of us still return to as reference points. Having spoken with so many collectors over the years, I’m curious: when you look at collectors today, what do you think actually connects them?

KV: Curiosity. That’s really the only common denominator.

People often try to identify patterns - background, wealth, geography - but none of that holds or matters. What does hold is curiosity. Early on, this space wasn’t capital-intensive; it was time-intensive. You had to learn how wallets worked, how platforms worked, spend hours in Discords [1], follow conversations that made no sense yet.

If you weren’t genuinely curious, you simply wouldn’t stay.

Osinachi, The Embrace, 2025. Courtesy of thefunnyguys Collection and the artist.

TFG: For me, it started with experimentation.

I was already in crypto around 2018, during the ICO period [2], so I was familiar with blockchains, decentralisation, and the idea of digital ownership. But with fungible tokens, it never really clicked. A lot of it didn’t make much sense to me at the time - and looking back, much of it probably didn’t make sense to anyone.

KV: You grew up around art though - traditional art, physical collections. Did that shape how you approached collecting digital work later?

TFG: Very much, but indirectly.

On my mother’s side, art was everywhere. My grandfather collected African and Japanese sculptures, Dutch paintings. The house was full of objects with presence. My mother took us to museums constantly. She still has this childlike awe when she looks at art - and that stayed with me.

At the same time, I never thought I’d work with art. If anything, I imagined that maybe one day, much later, I’d slow down and collect seriously. Instead, everything happened in reverse. I started collecting digital art while I was still at university, then founded Le Random [3], and only later built what looks like a “normal” company.

None of it was planned.

Sofia Crespo, soft_colonies_1898, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: Your first encounter with NFTs wasn’t art - it was NBA Top Shot [4]. Why?

TFG: Yes. I had been in crypto since the 2018 ICO cycle, but fungible tokens never really clicked emotionally. NBA Top Shot did.

Suddenly, ownership made intuitive sense. A digital object you could own, trade, share globally - always in perfect condition. I’m not a basketball fan, but the logic was undeniable. And being early helped. Most of those were eventually sold, and that capital became the fuel for everything that followed, including the art collection.

KV: You began collecting with your two brothers. How did that evolve?

TFG: At the time, I was collecting together with my two brothers. I was the one who initially discovered NFTs and pulled them in. We explored Top Shot together - deciding which moments to collect, which players mattered. Being three people also increased our chances of getting into early drops.

We were very early. When NBA Top Shot later exploded in early 2021, some of the moments we had collected for relatively little suddenly became very valuable.

But by the end of 2021, the paths diverged. My brothers are doctors - busy lives, different priorities. I became increasingly focused, almost exclusively, on generative art. Eventually, I was the only one still collecting.

Michael Kozlowski, Tectonics #014, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: From there, you moved into generative art - specifically your first Art Blocks [5] mint.

TFG: That moment changed everything.

I didn’t know what Art Blocks was. I didn’t know who Dmitri Cherniak [6] was. I just saw an announcement about Ringers, minted a few works, and watched the outputs appear. For the first time, art and blockchain were inseparable. The algorithm lived on-chain. The output was derived from the transaction hash. The artwork was literally born from the network.

Compared to projects where someone photographed a painting and sold the image as an NFT, this felt ontologically different. Fully self-contained. Honest.

That was the turning point.

William Mapan, Anticyclone #303, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: Five years later, you’ve collected over 6,000 works. Do you still collect instinctively - or has the knowledge and experience you gathered over time changed how you decide?

TFG: Both, and that tension is important.

Early on, collecting was emotional and exploratory. Now I have filters. Experience helps you see through marketing narratives - “firsts” that aren’t really firsts. But I’m very conscious of not letting knowledge kill joy. I don’t want my collecting style to be only rational.

Art collecting shouldn’t become a checklist. I still want that moment of falling in love with a work - otherwise it turns into asset management, not collecting.

Anna Ridler, Truth to Nature Flower II, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: Is there a work in your collection you would never part with, regardless of price, or do you believe that, ultimately, everything is for sale under the right conditions?

TFG: Under the right conditions, everything is for sale. But some works would take an astronomical number for me to part ways with them. My CryptoPunk by Larva Labs and baba and plasma004 by Manolo are good examples.

RJ, Self Care, 2025. Courtesy of thefunnyguys Collection and the artist.

KV: What is currently on your screen or hanging on your wall where you live? Is there anything from your generative art collection that you live with day to day?

TFG: Most of my physical artworks are safely stored at my parents' house, as I move around a lot. I currently have the pleasure of living with artworks by Qubibi [7], Travess Smalley [8] and Matt DesLauriers [9], and I use Feral File's FF1 Art Computer [10] to display software works such as 36 Points by Sage Jenson [11] (from Le Random's collection) on my television.

Travess Smalley, 11_05_22_Pixel_Rug_01_TFG_100, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: That distinction becomes sharper when you collect privately and institutionally through Le Random. It’s inevitable that you have to be more rational when you collect on behalf of the institution, no?

TFG: Exactly. My private collection can wander. Le Random cannot.

KV: When did you realize there was a need for a platform like Le Random?

TFG: Le Random exists because I was frustrated. Great generative works were being lumped together with Metaverse Land [12] and profile-picture projects, with no context. It felt like a disservice. Generative art has a lineage from the 1960s onward and needed a collection with a thesis, not a shopping cart.

That’s why Le Random isn’t just about ownership. It’s about research, writing, timelines, education. The collection isn’t meant to be traded - it’s meant to exist.

Qubibi, mimizu Untitled #26, 2023. Courtesy of thefunnyguys Collection and the artist.

KV: You structured Le Random around three generations of generative art.

TFG: Yes.

1965–1989: pioneers, pre-internet.

1990–2015: the internet era.

2016–now: the on-chain era.

Even though everything we collect is on-chain, we still use that framework to ensure continuity. It also means actively onboarding pioneers - encouraging them to release work on-chain, rather than rewriting history as if everything began in 2021.

KV: You’ve expressed a strong interest in the history and pioneers of generative art. Who was the first pioneering generative artist whose work you collected, and are there specific pioneers you especially value?

TFG: I believe Frieder Nake [13]'s Polygonzug from 1965 is the first generative artwork by a true pioneer that I collected. Peter Beyls [14] is a personal favourite, as I have had the pleasure of visiting his studio numerous times and I helped him mint his first on-chain software work. Having a close relationship with a pioneer from my home country of Belgium feels truly special. I hope to collect the work of Roman Verostko [15], Jean-Pierre Hebert [16] and Hiroshi Kawano [17] at some point. In my opinion, the sensitivity of Verostko and Hebert's work is unparalleled among pioneers, and Kawano is such a special character in this movement, coming from a philosophy background and creating digital designs in Tokyo as early as 1964.

Zach Lieberman, 100 Sunsets #40, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: We briefly touched on Le Random commissioning artists - including pioneers such as Vera Molnár - to mint work on-chain. How do you think about collecting and preserving the work of pioneers who have already passed away and are no longer able to mint or create new, commissioned works themselves?

TFG: In that case, I believe no on-chain works should be released. It's a complicated topic, but I'm generally not a fan of artist foundations retroactively minting works after the pioneer has passed away. If the artist has passed away, their physical works should be collected and preserved similarly to traditional physical art, I think.

(We haven't commissioned Molnár, by the way. Beyls, Giloth [18] and Ćosić [19] are good examples where we did.)

KV: Related to that: what is your perspective on post-minted or retroactively minted works - for example, AI or generative works that are later put on-chain to establish provenance? Do you think they hold the same value, or is something fundamentally different?

TFG: If the artist is alive and the physical equivalents haven't been offered on the market, I think it can make sense. When both the digital and physical are offered on the market, I find it rather confusing. Does this mean the work is an edition, even if both the physical and digital are unique artworks? Which one should carry the most value? Was the work intended to exist in both formats? ... In general, I prefer collecting artworks that only exist in digital format and that have certain characteristics that make the digital space a natural or even necessary home for the artwork.

Kim Asendorf, monogrid a5, 2021. Courtesy of thefunnyguys Collection and the artist.

KV: What have been the most challenging aspects of your collecting journey - storage, preservation, context, or something else entirely?

TFG: Preservation has become the biggest concern over time, not in theory, but very concretely.

In recent years, we’ve seen multiple platforms shut down or effectively go dormant. Even when a platform disappears quietly, it exposes structural weaknesses: artworks stored on centralised servers, metadata that can’t be migrated, media that becomes inaccessible even though the token still exists.

Emily Xie, Memories of Qilin #595, 2022. Courtesy of thefunnyguys Collection and the artist.

KV: Can you tell me more about Raster.art [20]? And again, your motivation?

TFG: Raster came out of frustration as a collector.

Digital art doesn’t live on one chain. It doesn’t live on one platform. Artists release work on Ethereum, Tezos, Solana, Bitcoin - often across multiple wallets, sometimes over many years. Yet most platforms force you into a fragmented view of that practice.

OpenSea [21], in particular, became increasingly difficult to use from a digital art perspective. In 2021, the experience was actually better than it is today. Over time, the interface prioritised volume and liquidity over legibility and context. For someone trying to understand an artist’s full body of work, it became almost unusable.

Raster was built to solve that fragmentation. The goal is simple: make it possible to see all of an artist’s on-chain work across chains, platforms, and wallets in one coherent place.

We don’t mint new works. We index existing ones. We back up media. We focus on legibility, context, and continuity. It’s about reducing friction - both for experienced collectors and for newcomers who would otherwise face an impossibly steep learning curve.

yuri, Go Players, 2025. Courtesy of thefunnyguys Collection and the artist.

KV: How do you think about risk?

TFG: It worries me - and it should worry everyone.

Centralised storage is fragile. When platforms disappear, tokens break. With Raster, we started by solving a simpler problem - fragmentation. Artists working across chains, across wallets, across platforms. No coherent view.

But preservation naturally follows. We back up media, index metadata, prepare for migration if needed. If a chain fails, it won’t be solved by one company - it’ll require community consensus. But infrastructure has to anticipate that possibility.

KV: When people talk about value in Web3, they default to price. How do you define value?

TFG: Value is composite.

Aesthetic value. Conceptual value. Historical position. Cultural relevance. Community resonance.

What’s different in Web3 is that value formation started bottom-up. Communities rejected imported hierarchies. Over time, though, we’re seeing convergence. Some traditional structures exist for a reason.

The challenge is keeping grassroots experimentation alive while allowing institutions to mature.

Iskra Velitchkova, CASTAWAY, 2025. Courtesy of thefunnyguys Collection and the artist.

KV: So Web3 shouldn’t reject tradition but shouldn’t replicate it blindly either.

TFG: Exactly. Ethereum will probably host high-value, high-profile sales. Tezos and others will remain experimental, weird, artist-led. That plurality is healthy.

KV: Do you think about legacy?

TFG: Honestly? Not yet.

Right now, I’m more interested in contributing meaningfully. Legacy might emerge later, as a by-product. But if I start collecting for legacy, I think something essential would be lost.

KV: That’s a bit strange to hear, because as we speak, you seem to be moving very intuitively toward building a legacy - both for your collection and for Le Random (smiles).

TFG: I understand why it looks that way from the outside. And maybe, structurally, that’s true.

But for me, it doesn’t feel like legacy-building. It feels more like responding to what’s missing in front of me. I collect because I care. I build things like Le Random or Raster because I’m frustrated, because something feels incomplete, broken, or unfair to the work itself. What motivates me is being useful in the present moment - contributing to something while it’s still forming, while it’s fragile.

Maybe what looks like legacy from the outside is really just a series of decisions driven by a refusal to accept the current state of things as “good enough.”

seohyo, redraw (Self-Portrait with Bandaged..).date(210714), 2021. Courtesy of thefunnyguys Collection and the artist.

KV: How do you see the digital art market evolving over the next ten years, and what can collectors and platforms contribute to a more resilient infrastructure?

TFG: I think we’re moving toward convergence.

Early on, Web3 value formation was very bottom-up. Communities actively rejected external validation from traditional art institutions. That reaction made sense - especially given how extractive some early entries into the space were.

Over time, though, we’re seeing certain traditional structures re-emerge because they serve real functions: galleries, curators, long-term collectors, contextual frameworks. The challenge is integrating those without losing what made this space different in the first place.

Collectors can contribute by thinking beyond acquisition - by supporting context, preservation, and continuity. Platforms can contribute by prioritising infrastructure over hype: better indexing, better storage practices, better migration paths.

If digital art is going to matter in fifty years, the work needs to remain accessible, legible, and situated within its history. Building that resilience is less visible than market cycles, but far more important.

KV: Last question. Advice for new collectors?

TFG: Start. Don’t over-intellectualise.

Collect something small. Feel what ownership does to your relationship with the work. Spend time with it. Action produces understanding faster than theory ever will.

And protect your curiosity - that’s the most valuable asset you’ll ever have.

KV: How would you describe your collection in three words?

TFG: On-chain generative art.

On X: @thefunnyguysNFT

@thefunnyguysNFT’s collection link: https://opensea.io/thefunnyguys_vault

Vocabulary

[1] Discord - A chat platform widely used by NFT communities for discussion, drops, and artist updates.

[2] ICO period - Refers to the 2017–2018 boom in Initial Coin Offerings (ICOs), when many crypto projects raised funds by selling newly issued fungible tokens (often ERC-20 tokens on Ethereum) directly to early supporters. The era helped popularize wallets, token ownership, and on-chain participation before NFTs became mainstream.

[3] Le Random - A digital generative art institution co-founded by thefunnyguys and Zack Taylor. It collects, contextualizes, and elevates on-chain generative art through a historically spanning, chain-agnostic collection and an editorial platform that situates the medium within art history.

[4] NBA Top Shot - An officially licensed NFT collectibles platform by Dapper Labs (on the Flow blockchain) where users buy, collect, and trade limited-edition NBA highlight “Moments.” It became a major NFT on-ramp during its breakout in early 2021.

[5] Art Blocks - Platform for on-chain generative art, known for curated and factory drops.

[6] Dmitri Cherniak - Canadian generative artist based in New York. His Ringers series (Art Blocks Curated, 2021) is a landmark generative NFT project of 1,000 unique works.

[7] Qubibi - A project formed in 2006 by Kazumasa Teshigawara. Its name derives from the Japanese 首美, combining kubi (the joints linking head and limbs) and bi (art).

[8] Travess Smalley - An artist who works with computation to build generative image systems across painting software, computer graphics, digital images, books, drawings, and “Pixel Rugs”.

[9] Matt DesLauriers - Canadian artist based in the UK whose practice centers on code, software, and generative processes. He is also an active open-source contributor, building tools and libraries that support creative coding and generative art workflows.

[10] Feral File’s FF1 Art Computer - A small, purpose-built ‘player’ for digital and computational art that can be plugged into any HDMI display to browse and play curated playlists, including real-time generative/interactive works.

[11] 36 Points by Sage Jenson - A generative, agent-based work in which particles (“agents”) sense and alter a shared environment. It produces emergent patterns and unstable flows, echoing Primordium as matter on the verge of becoming.

[12] Metaverse Land - A persistent, shared digital environment where users interact via avatars. In Web3, it is closely tied to NFTs, virtual galleries, and immersive worlds.

[13] Frieder Nake - Early generative artist and computer scientist active since the 1960s.

[14] Peter Beyls - Artist and researcher whose work bridges computer science and the arts through generative systems across visual art, music, and interactive media. His work has been presented internationally, including at SIGGRAPH, ICMC, and ISEA.

[15] Roman Verostko - Pioneering figure in algorithmic and plotter-based art, known for writing custom code to generate pen drawings and prints, often bridging calligraphic sensibilities with computational systems.

[16] Jean-Pierre Hebert - Pioneering digital and algorithmic artist who began making computer drawings in the mid-1970s and later co-founded the Algorists in 1995, coining and defining the term through code.

[17] Hiroshi Kawano - Japanese artist and theorist trained in German philosophy and aesthetics whose early interest in semiotics and Max Bense’s “information aesthetics” led him to pioneering computer art.

[18] Giloth - Pioneering new-media/computer artist whose practice spans digital media, animation/video, virtual environments, mobile art, and installations. She was also involved in early SIGGRAPH-adjacent computer-art exhibition initiatives.

[19] Ćosić - Refers to Vuk Ćosić, a Slovenian net.art pioneer widely known for works using ASCII code (ASCII art).

[20] Raster - A cross-chain platform built to make digital art collecting more legible by bringing everything into one place: unified artist profiles showing complete oeuvres across networks and platforms, aggregated market data, and a single view of a collector’s entire cross-chain collection.

[21] OpenSea - A major NFT marketplace allowing users to buy, sell, and mint digital assets.

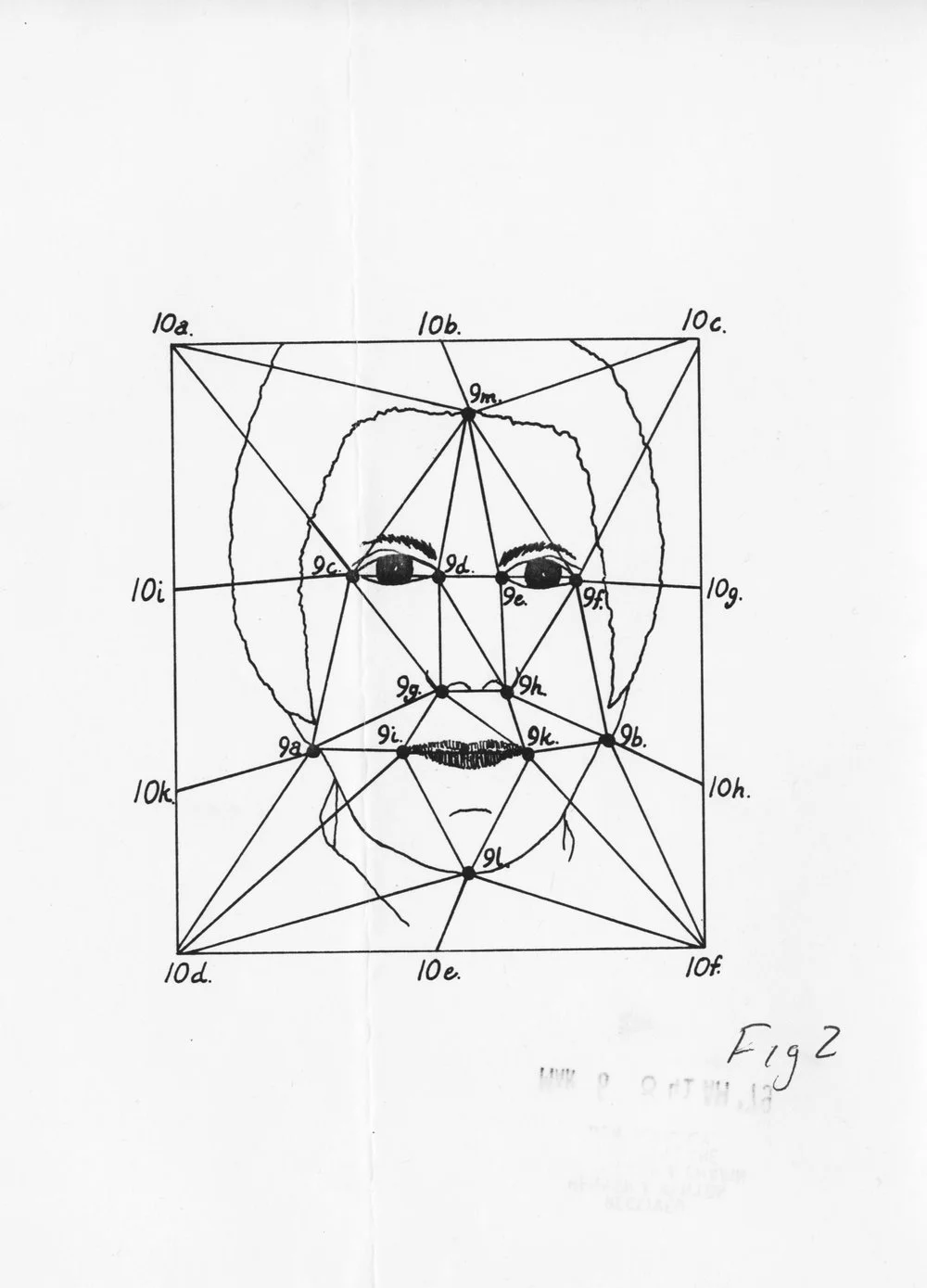

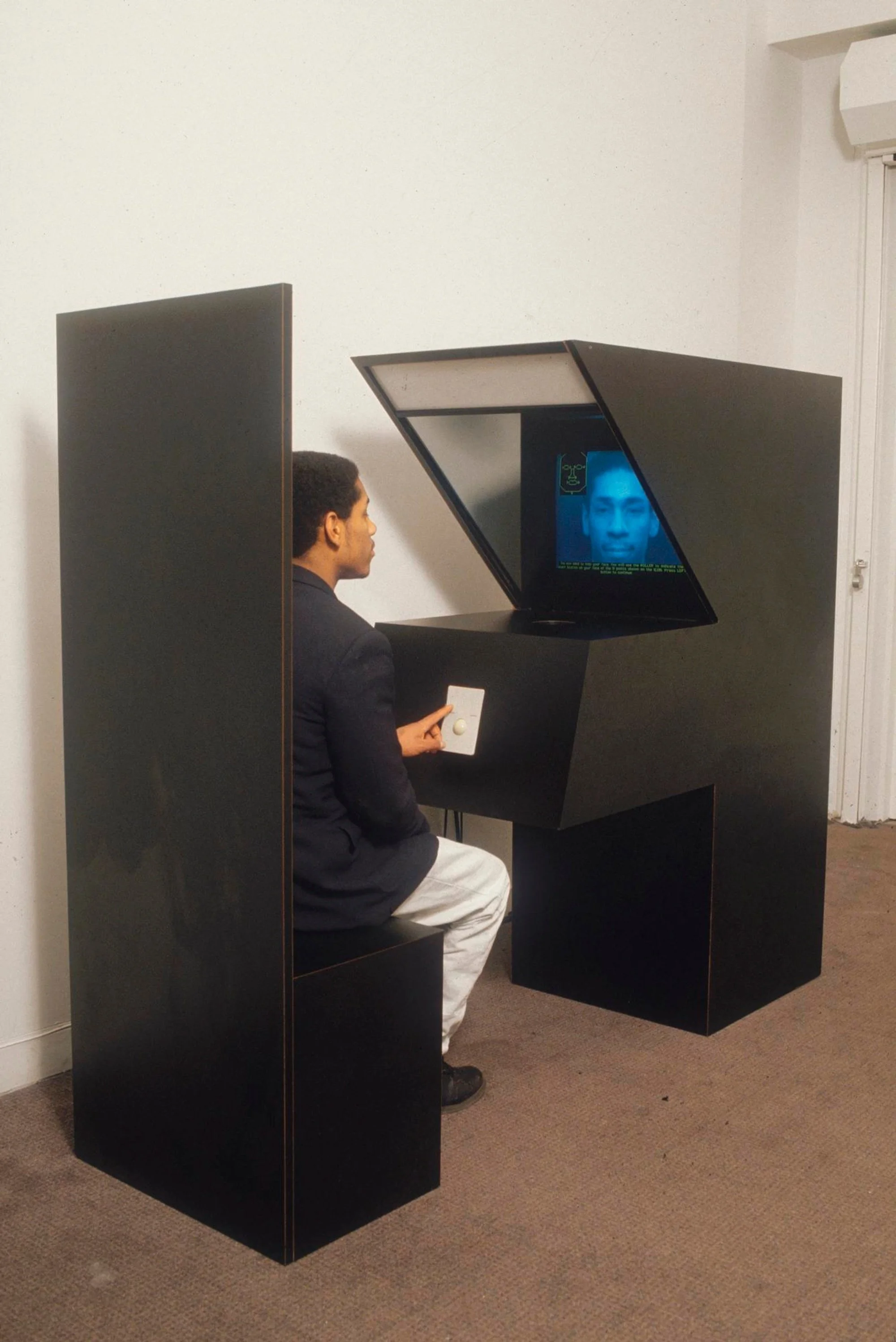

You Are Here: Decentralization, Agency, and the Politics of Participation

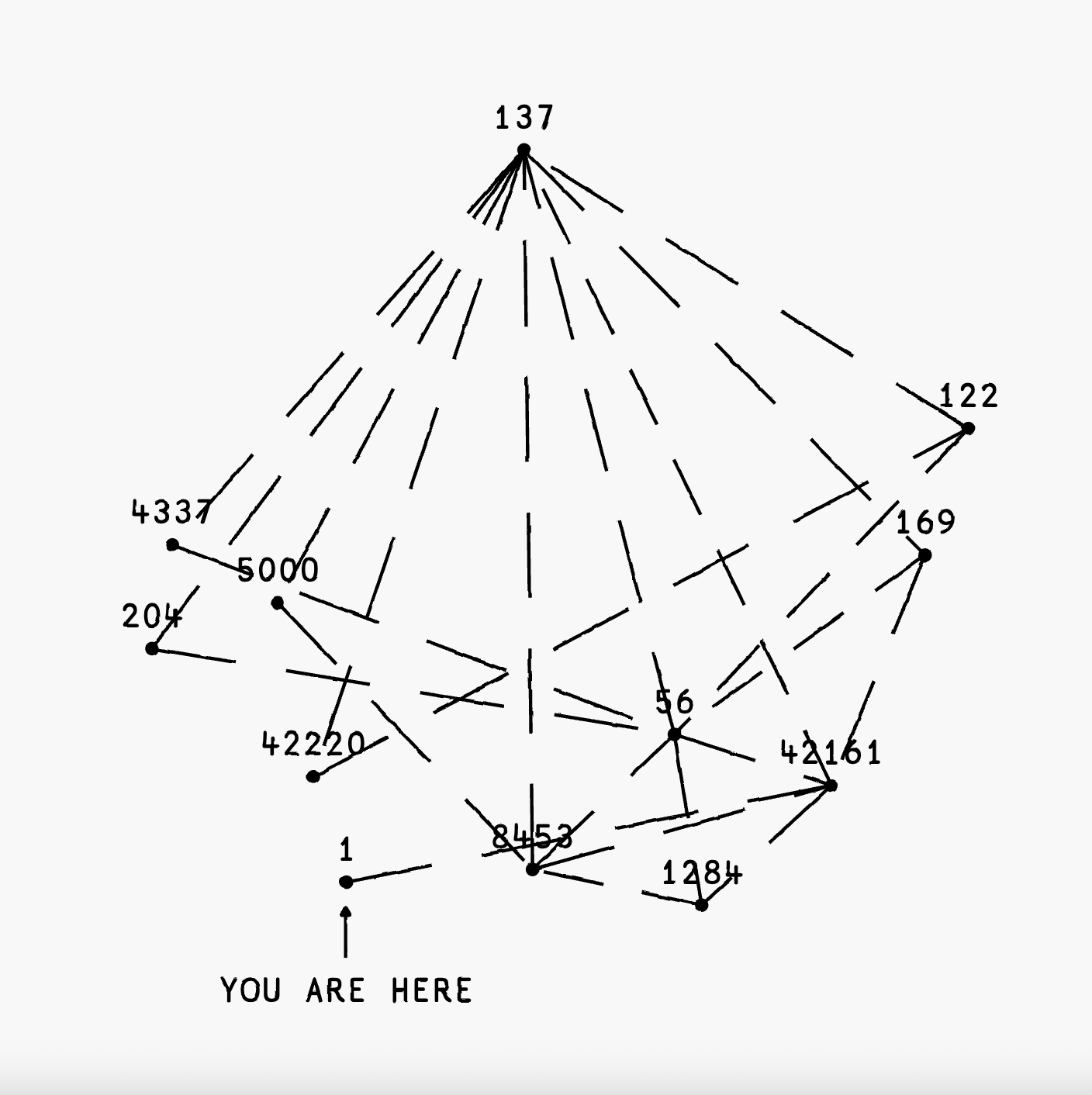

On the cover: 0xfff, You Are Here, 2024

Who decentralizes? For whom?

And what actually changes when we say that something has been decentralized?

Over the last few years, decentralization has become a familiar refrain. One hears it repeatedly at global policy forums like the World Economic Forum in Davos, where it appears alongside promises of tokenized economies, digital ownership, and new models of AI governance. In white papers, panels, and pitch decks, decentralization is framed as a corrective - to centralized power, to opaque institutions, to economic exclusion. The message is often reassuring: technology will fix what politics could not.

But outside these rooms, the picture feels less certain.

In practice, I see decentralization show up unevenly. Some systems redistribute access while quietly recentralizing control. Others invite participation without offering real influence. Many depend on centralized platforms, capital, or infrastructure to function at all. This leaves me with an uncomfortable question: does decentralization, as it is currently practiced, exist beyond rhetoric? And if it does, who actually experiences its benefits - and who absorbs its risks?

Decentralization is usually described as technical infrastructure, but I have come to understand it just as much as a story we tell about power, agency, and progress. It shapes expectations before it shapes systems. To decentralize is never only to redesign a network; it is to rearrange responsibility, labor, ownership, and trust. Something is always being redistributed, even when it remains invisible at first.

This is where art becomes useful to me - not as illustration or celebration, but as a testing ground. Artistic practice is not required to resolve contradictions. It can sit with them. Artists can model speculative systems alongside real ones, exaggerate their limits, or strip them down to their assumptions. In doing so, art exposes the gap between how decentralization is imagined and how it is lived: not only whether something works, but how it feels, who it serves, and what it leaves out.

This article approaches decentralization through that lens. Rather than asking whether decentralization is good or bad, I am interested in a more practical and more difficult question: under what conditions can decentralized technologies serve their original intentions, and where do they fall short? By moving between concrete case scenarios and artistic explorations, the aim is to better understand the limits of decentralization - and what may still need to be built, reconsidered, or deliberately left behind.

The Persistent Centre: Why Decentralization Never Fully Decentralizes

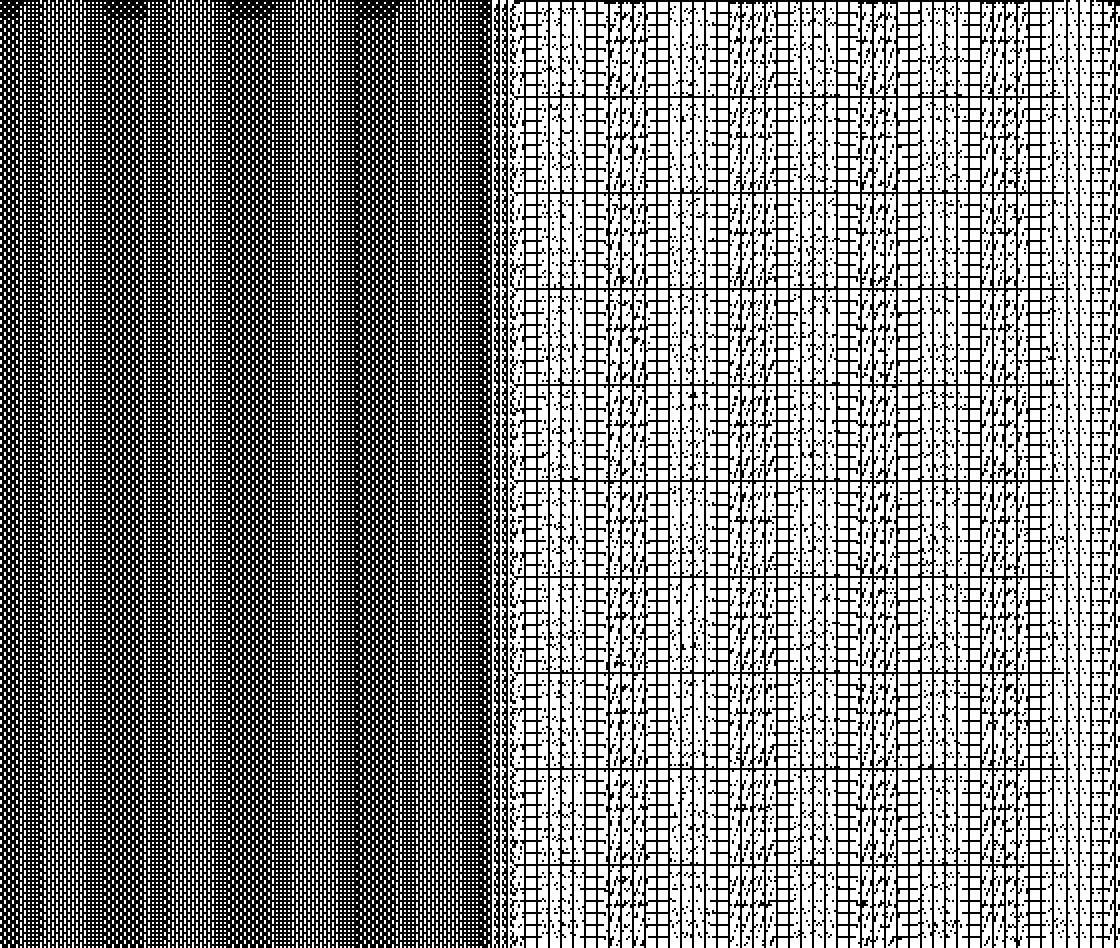

0xfff, You Are Here 42161, 2024

One promise of decentralization is the disappearance of the centre. Remove the core, distribute control, and power will flatten out. Yet in practice, the centre rarely disappears. It shifts, disguises itself, or reappears elsewhere.

Decentralization only makes sense in relation to what it claims to move away from. Even systems designed to avoid hierarchy remain organized around invisible points of gravity - capital, infrastructure, interfaces, or dominant narratives. What looks distributed on paper can feel strangely centralized in everyday use.

This isn’t only technical. It’s cultural. Many political, economic, and computational systems are built on the assumption that coordination requires a focal point. Even when authority is dispersed, legitimacy, trust, and decision-making tend to cluster. As a result, decentralization often becomes a rearrangement of control rather than its disappearance.

Blockchain makes this visible. While data may be distributed, influence often concentrates around:

• those who entered early or hold large amounts of capital,

• those with technical knowledge or control over infrastructure,

• and those who shape the story of what the system is and why it matters.

Participation expands, but authorship narrows. Responsibility spreads, but accountability becomes harder to locate.

Decentralized systems are also not immaterial. They rely on physical and institutional infrastructures: energy-intensive computation, hardware supply chains, data centers, undersea cables, and often cloud services and centralized access layers. Claims of efficiency do not eliminate these costs; they redistribute them, frequently away from those who benefit most. In that sense, the centre persists not only through capital and narrative, but through maintenance and dependency: decentralization can remove visible intermediaries while leaving the underlying responsibilities harder to see.

Simon Denny, Blockchain Visionaries, 2016.

This question of where power actually resides becomes explicit in You Are Here (42161) (2023) by 0xfff. The work distills decentralization into a deceptively simple gesture: a “YOU ARE HERE” marker tied to chain ID 42161 (Arbitrum), set within a map of other EVM chain IDs connected by traces produced through bridging. Rather than presenting blockchain as placeless or abstract, it insists on situatedness. You are not everywhere. You are here, within a specific protocol, under particular rules, dependencies, and governance assumptions. In doing so, the work makes infrastructure legible as a cultural condition. Decentralization does not erase location or responsibility; it redefines them. What appears distributed still has a centre, even if that centre is no longer visible, only felt.

Other artists make this mismatch - between how decentralization is described and how it is experienced - visible in different ways. Simon Denny’s Blockchain Visionaries (2016–ongoing) shows how “decentralized” systems borrow centralized power aesthetics: trade-fair displays, corporate branding, founders staged as visionaries, protocols framed as inevitabilities. What Denny makes clear is that decentralization does not escape myth-making. It produces new heroes, new centres of attention, and new forms of legitimacy. The system may be distributed, but the story is tightly managed. In this sense, decentralization functions as a performance, something that must be continuously staged to sustain belief.

What Denny captures is not only a technical structure, but a perspective. He focuses on how power feels from the inside: confusing, opaque, fragmented, yet strangely directional. You may not see the centre, but you can sense its pull.

Removing a centre at the technical level does not dissolve hierarchy. Without attention to culture, governance, and storytelling, decentralization can become another way of recentralizing power under a different name.

NFTs, Markets, and the Reduction of Art to Assets

If decentralization promised a rethinking of ownership, the NFT boom revealed how quickly that promise could be bent into familiar market behavior.

Between 2020 and 2022, much of the art world’s engagement with blockchain condensed into a simplified form: the JPEG as a tradable asset. What was presented as a new model of artistic autonomy often became a faster, more volatile version of the existing art market - scarcity, speculation, and visibility. The technology was new; the behavior was not.

NFTs made transactions frictionless and ownership legible, but in doing so they frequently reduced artistic value to exchange value. Context, process, care, and responsibility were sidelined in favor of speed and liquidity. Participation itself became financialized: to engage was to speculate, to belong was to buy in. Broader questions around preservation, authorship, access, and governance were deferred rather than addressed.

Institutions responded cautiously. Museum acquisitions signaled openness to the medium, yet most engagements stopped at acquisition. Less frequently examined were structural questions: who maintains these works over time? What happens when platforms disappear? How are software-based artworks conserved? What does stewardship mean when ownership is distributed but responsibility remains diffuse?

This market-driven phase, however, should not be mistaken for the limits of the technology itself. Blockchain does not inherently function as a speculative asset vehicle. Used with intention, it can operate as infrastructure for trust, memory, and coordination - areas where the art world has long faced structural challenges.

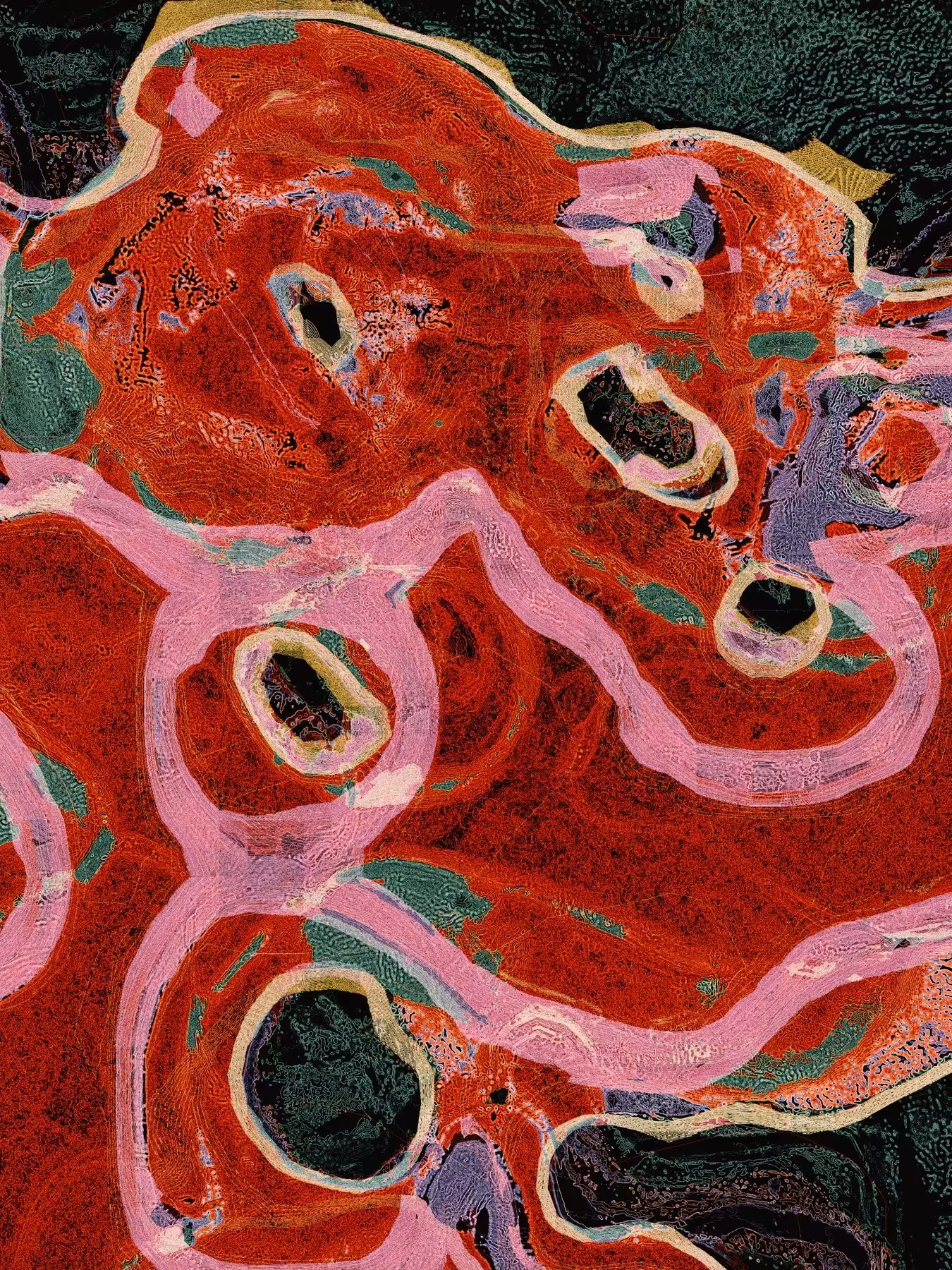

Sarah Friend, Life Forms, 2021.

Some artists have worked deliberately against dominant NFT logics to make this potential visible. One approach can be seen in Sarah Friend’s Life Forms (2021), which was designed to resist extraction and speculation altogether. By embedding ecological limits directly into the code, the work rejects the assumption that blockchain-based art must be monetized in order to exist. Value is not accumulated through circulation or resale, but constrained through care, duration, and interdependence. Speculation is not discouraged rhetorically; it is rendered structurally impossible.

A different strategy appears in Jack Butcher’s Full Set (2025), which operates within, rather than against, the much-criticized mechanics of Rodeo’s micro-priced, 24-hour open editions, often dismissed as disposable “digital postcards.” Instead of rejecting these conditions, Full Set uses them as material. By structuring twenty-seven works as a capped, interdependent system capable of permanent transformation once a collective threshold is reached, the project shifts attention away from the individual image toward the dynamics of coordination, accumulation, and network effects. Value emerges not from scarcity alone, but from how platform rules, collective behavior, and time interact.

Taken together, these examples point to a more precise diagnosis. The problem was not that art entered the blockchain, but that the art world largely adopted the most reductive uses of the technology first. What these works demonstrate is that different value systems are possible, whether through refusal, constraint, or strategic engagement with existing platforms. The challenge now is not to abandon decentralized tools, but to reorient them away from short-term market optimization and toward longer horizons of care, responsibility, and cultural preservation.

Jack Butcher, Full Set, 2025.

Games, Gamification, and the Illusion of Agency

Almost everything today carries the logic of a game. Finance is gamified through dashboards, points, and quests. Political participation is reduced to likes, shares, and viral moments. Work, wellness, and social life are increasingly structured around incentives, progress bars, and rankings. This is more than a design language. It is a way of coordinating attention and motivation, and of organizing behavior.

Games, in this sense, offer a useful lens for thinking about decentralization. They promise freedom, experimentation, and choice, yet they are always bounded systems. Rules are defined in advance, and outcomes are constrained within carefully designed parameters. Players act freely, but never outside the logic of the game itself. Precisely because of this tension, games and simulations make visible how agency is structured, measured, and governed.

Blockchain’s entry into gaming therefore feels intuitive. Games already rely on virtual assets, internal economies, scarcity, and forms of ownership. In theory, blockchain infrastructures could extend these dynamics by allowing players and creators to retain value, participate in governance, and shape the worlds they inhabit. In practice, however, decentralization in games has so far been applied more successfully to assets than to authority. In games such as Axie Infinity, players own characters as NFTs and can trade them freely. Yet the deeper structures of the game - token supply, reward mechanisms, economic balance - remain centrally designed and adjusted. When the in-game economy expanded and later contracted, players experienced the consequences directly, while decision-making remained largely out of reach. Ownership became distributed, but responsibility stayed diffuse.

Related dynamics can be observed in metaverse platforms such as The Sandbox and Decentraland. Land, avatars, and objects are tokenized, and governance mechanisms formally exist. Yet meaningful influence often correlates more strongly with capital concentration than with participation. Players contribute time, creativity, and social energy, generating value through presence and interaction, while the systems that define that value remain difficult to reshape collectively.

Across these examples, a consistent pattern appears. In games, decentralization is most often applied at the level of assets, while authorship over the system itself remains limited. Interfaces signal decentralization, yet decision-making continues to concentrate beneath the surface. This does not negate the potential of blockchain in games, but it raises another question: why has decentralization been implemented so narrowly?

The same infrastructures that make engagement legible, comparable, and exchangeable could support other arrangements. What would it mean to extend decentralization beyond ownership, into governance, rule-making, and long-term stewardship? Rather than optimizing participation for short-term incentives, games could be designed as shared systems - ones in which players meaningfully influence economic parameters, assume responsibility collectively, and shape worlds that evolve over time.

In this sense, the question is not whether games should be decentralized, but how. Artistic games offer important clues. Rather than rehearsing utopian futures, they rehearse awareness.

Axie Infinity developed by Sky Mavis, 2018.

Narratives, Ecologies, and Care

Decentralized systems are not immaterial. They rely on physical infrastructures: energy-intensive computation, mineral extraction for hardware, data centers, undersea cables, and cloud services. Claims of efficiency or scale do not eliminate these costs; they shift and redistribute them, often geographically and politically away from those who benefit most. When decentralization is discussed primarily in terms of access or autonomy, its material footprint tends to disappear from view, allowing extractive logic to re-enter under new technical forms rather than being fundamentally challenged.

The question, then, is not whether decentralization reduces visible intermediaries, but whether it makes responsibility legible.

What would it mean to design decentralized systems that account not only for participation, but for energy use, maintenance, authorship, and long-term care? This may require slowing systems down, prioritizing transparency over scale, and treating infrastructure as an ethical choice. In this sense, decentralization becomes less about removing centres and more about making dependencies visible and deciding, collectively, which ones we are willing to sustain.

At the same time, decentralization operates powerfully through narrative. I often encounter it first not as a protocol, but as a story: autonomy, participation, collective ownership. These narratives generate legitimacy and sustain trust long before anyone engages with the underlying system, often compensating for limited avenues of actual decision-making. Decentralization becomes less a condition than a claim, something repeatedly asserted rather than fully realized.

The challenge is not to abandon these narratives, but to test them. If decentralization is invoked as a promise, it should be accompanied by mechanisms that make decision-making, responsibility, and exit visible. Without this alignment, decentralization risks functioning primarily as a legitimizing story, one that stabilizes trust without redistributing power.

Where Decentralized Systems Actually Succeed

Decentralization is often discussed as an ideology, but that framing is rarely useful. More precisely, decentralization functions as a coordination technology: a way of aligning incentives, belief, participation, and time across distributed networks. The most resilient systems do not succeed by eliminating intermediaries altogether, but by coordinating human behavior over long-term horizons. Value compounds when friction is reduced, incentives are coherent, and shared narratives sustain participation. In this sense, the network economy is not defined by ownership alone, but by forms of contribution, capital, attention, labor, culture, and meaning.

The strongest decentralized systems do not feel like products; they feel like movements, because they reward participants not only financially, but socially and psychologically.

At the same time, decentralization is not a universal solution. It fails when governance becomes performative, participation is simulated, or power quietly recentralizes behind opaque mechanisms.

The challenge, then, is not to decentralize everything, but to apply decentralization where openness, transparency, and collective stewardship genuinely add value, particularly in domains at the intersection of capital, culture, and community, where context, memory, and responsibility matter, and where traditional institutions struggle to scale trust over time.

Rhea Myers, Is Art (Token), 2023.

Conclusion

I believe that the future belongs neither to centralized control nor to decentralization as dogma, but to systems that understand how humans actually behave - socially, psychologically, and over time.

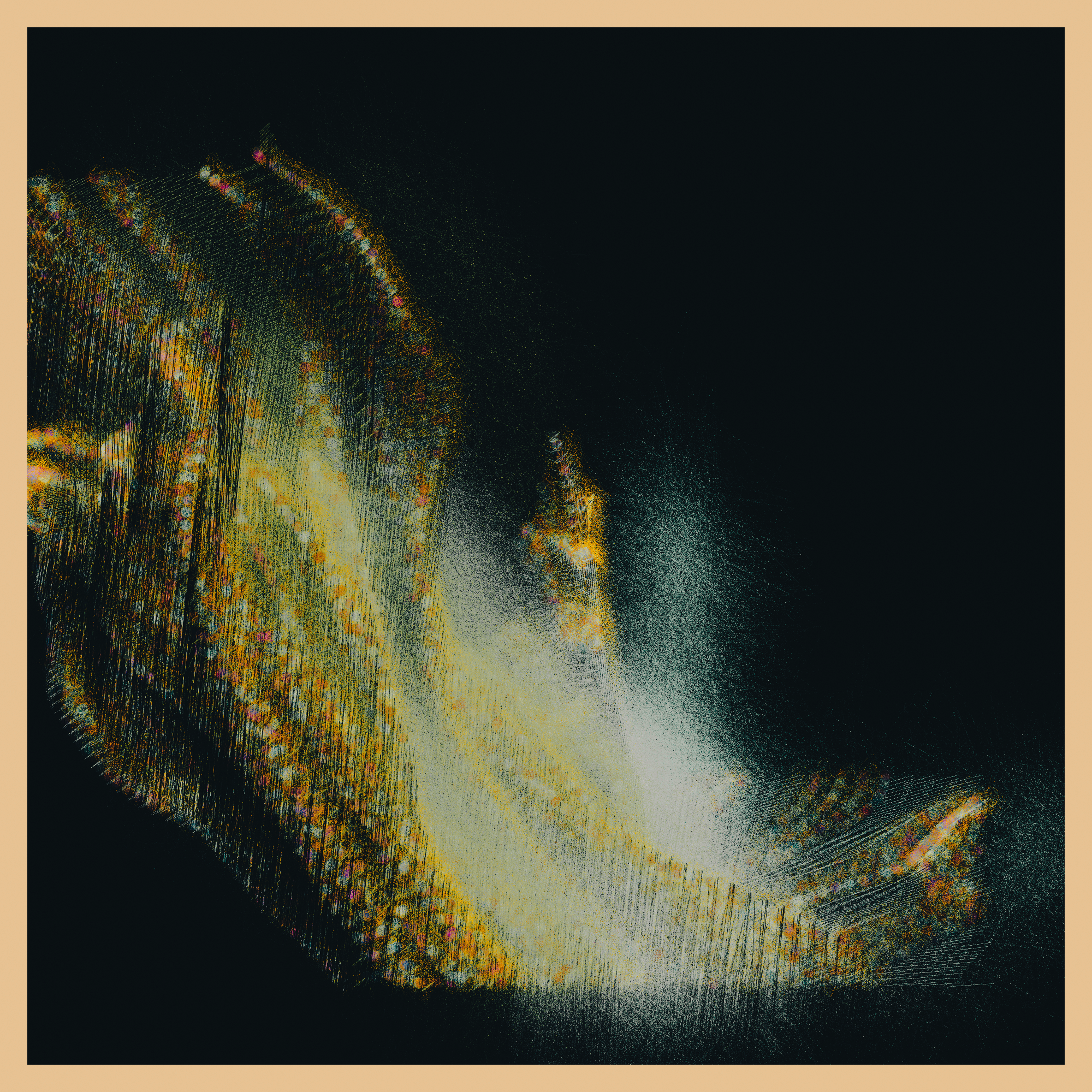

Experiments in algorithmic governance, such as those developed by Primavera De Filippi through projects like Plantoid, expose both the promise and the limits of decentralization. By embedding coordination and decision-making directly into smart contracts, these works attempt to redistribute authority structurally rather than rhetorically. Yet even here, parameters are set, interfaces frame participation, and legal and infrastructural dependencies remain. The centre does not disappear; it becomes conditional, procedural, and visible.

This is why art remains central to thinking about decentralization. Artistic practice does not simply adopt technological systems - it tests them. It slows them down, exposes their assumptions, and insists on care where efficiency would prefer abstraction. In a landscape obsessed with scale, art reminds us that stewardship is not automatic. It must be designed, practiced, and collectively sustained.

Primavera De Filippi, PLANTOÏD #15, 2014.

References & Works Discussed

The arguments developed in this essay are informed by artistic and infrastructural experiments that make decentralization tangible. Works by David Simon, aka 0xfff (You Are Here (42161)), Simon Denny (Blockchain Visionaries), Sarah Friend (Life Forms), Jack Butcher (Full Set), Rhea Myers (Is Art (Token)), and Primavera De Filippi (Plantoid) each explore, in different ways, how power, governance, ownership, and responsibility are structured within decentralized systems.

Platforms and organizations referenced include the World Economic Forum, Axie Infinity (developed by Sky Mavis), The Sandbox, and Decentraland.

Culture Doesn’t Preserve Itself. Help Us Build the Infrastructure.

Your donation helps support the ongoing independent, non-profit initiative dedicated to exploring how digital art is written, contextualised, and preserved.

Contributions fund ongoing research, protocol development, and archival infrastructure, ensuring that the art and ideas shaping this era remain accessible, verifiable, and alive for future generations on-chain:

Donate in fiat

You can contribute via credit card here:

https://www.katevassgalerie.com/donation

Donate in ETH

Send ETH directly to:

katevassgallery.eth - 0x56A673D2a738478f4A27F2D396527d779A1eD6d3

or by buying the book:

www.book-onchain.com

★Why We Look Together: CryptoPunks and the Psychology of Collective Attention

Why do thousands of people care about the same 24×24 pixel faces - and what does that reveal about how culture forms?

CryptoPunks sit at the center of our curatorial history not because of what they later became, but because of what they first demanded: a recalibration of attention.

In 2018, when we presented CryptoPunks as artworks in Perfect & Priceless, the gesture was neither symbolic nor retrospective. It marked the first time these on-chain objects were framed explicitly as art within a gallery context in Zurich - physically printed, signed, and publicly acknowledged by Matt Hall and John Watkinson not as developers, but as artists.

At that moment, nothing about CryptoPunks felt inevitable. They appeared fragile, even awkward: tiny pixelated faces, rigidly constrained, carrying a conviction that was difficult to articulate yet impossible to dismiss. Their presence resisted spectacle. Their scale resisted authority. To show them as artworks was less an act of certainty than of intuition - a sense that something structural was unfolding, even if the vocabulary to describe it had not yet stabilized.

The skepticism they provoked was not only aesthetic, but perceptual. Viewers did not yet know how to look at them - or what kind of attention they required. And yet, with time, these same images became among the most recognizable cultural artifacts of the 21st century: circulating simultaneously as artworks, avatars, status symbols, financial instruments, and historical reference points.

This essay does not approach CryptoPunks as isolated images or market phenomena. It treats them as an unusually legible case study of how culture forms under networked conditions - how attention synchronizes, how meaning accumulates, and how network power emerges over time.

Installation view of CryptoPunks: 24 unique signed prints with sealed envelopes granting access to the digital punks, Perfect & Priceless, Kate Vass Galerie, Zurich, 2018. Image Credit: Kate Vass Galerie

From Objects to Signals: How Networks Learn What to See

Under networked conditions, culture no longer forms through singular authorship or institutional approval. It forms through coordination.

Long before something becomes meaningful, it becomes visible. And long before it becomes visible everywhere, it becomes visible together. Digital culture is not persuaded into existence; it emerges through the slow alignment of attention. Shared looking precedes shared understanding. Repetition precedes belief.

This dynamic predates blockchains. Early internet culture - forums, image boards, chat rooms - already showed how meaning could emerge without permission. Memes, avatars, and in-jokes succeeded not because they were authoritative, but because people kept returning to them together. What digital systems changed was not the mechanism, but its speed, persistence, and visibility. Attention became public, measurable, and cumulative.

Long before something becomes meaningful, it becomes visible together. Image credit: Punk DAO

CryptoPunks emerged inside this logic.

Their early circulation across Twitter, crypto forums, and early Discords did not immediately produce agreement about what they were or why they mattered. What it produced was synchronized looking. Before people agreed on meaning, they agreed - often unconsciously - that these images were worth looking at together.

Collectors compared traits, debated rarity, and slowly developed a shared vocabulary. Through repetition and proximity, a grammar formed. Familiarity bred legitimacy. Recognition invited participation. This marks a crucial inversion: value does not precede attention; attention creates the conditions for value to emerge.

CryptoPunks did not go viral as quickly as it may seem. For years, they occupied a liminal state - recognized by some, ignored by many. In their interview in Collecting Art Onchain, Matt Hall and John Watkinson recall that “it was just a small group of people who were interested at the time, so it was still a pretty small scene.” (Proof of Punk: When Code Became Culture, in Collecting Art Onchain, 2025. p. 89.) But networks operate with thresholds. Once enough people realize that others are also looking, attention becomes self-reinforcing. Visibility no longer depends on novelty, but on mutual acknowledgment.

At that point, CryptoPunks ceased to function merely as images. They became social signals. To hold one, display one, or reference one was to signal participation in a shared history of looking. Their significance lies less in being early NFTs than in documenting how digital culture now learns what to see.

CryptoPunks displayed on a digital billboard in Times Square, 2021. Image credit: Alexi Rosenfeld/Getty Images

The Return of the Face: Identity After Anonymity

The rise of CryptoPunks exposes a central paradox of the digital age. Early internet culture - shaped by cyberpunk imaginaries and libertarian ideals - promised post-identity fluidity: anonymity, usernames, disembodiment, and escape from fixed social markers. Yet as decentralized systems matured, identity collapsed back into faces.

This return is not accidental. The face is humanity’s oldest social interface. Across cultures, faces anchor trust, recognition, and status. Masks, portraits, icons, and effigies have long mediated between the individual and the collective. Digital psychology consistently shows that faces trigger rapid affective responses, enabling attribution, memory, and social bonding even in abstract environments.

CryptoPunks are paradoxical objects: anonymous yet deeply personal; generic yet singular; mass-produced yet intensely identified with. They function simultaneously as masks and portraits. As avatars, they protect privacy. As faces, they enable recognition. In trustless systems - where legal identity is absent and interaction is mediated by wallets rather than names - the face re-emerges as a stabilizing symbolic anchor.

In some ways, Web3 reconfigured identity. Reputation replaced biography. The avatar became a reputation container - a visual proxy through which trust, credibility, and continuity could be negotiated without revealing the person behind it. Matt Hall and John Watkinson explicitly hoped that collectors would adopt Punks as personal identities. The minimalist, video-game-inspired aesthetic enabled this shift. As they explained, “the minimalist, video game art-inspired portrait aesthetic allowed owners to proudly display their CryptoPunks as their profile pictures on social media.” (Proof of Punk: When Code Became Culture, in Collecting Art Onchain, 2025. p. 76.)

The Punk moved from image to interface.

The avatar became a reputation container, a visual proxy for trust, credibility, and continuity without revealing the person behind it. Image credit: curated.xyz

Even in systems designed to decentralize authority, humans continue to organize belonging around faces.

Seen this way, CryptoPunks belong to a much longer lineage. Roman busts marked citizenship. Funerary portraits bound identity to memory. Coins circulated authority through repetition. Heraldry compressed belonging into readable systems. In each case, the face functioned as an interface - a way to be recognized and situated within a collective.

CryptoPunks are not expressive portraits in the art-historical sense. They are recognition devices. Their power lies not in depiction, but in reliable legibility within a shared network.

Intention, Restraint, and the Metaphysics of Release

CryptoPunks emerged from an experiment with remarkably little strategic foresight: no presale, no roadmap, no royalties, no institutional backing. And yet, it produced coherence rather than collapse.

The free-to-claim mechanism was central. While technical barriers still existed, the absence of presales and whitelists delayed speculation and foregrounded participation. Ownership was motivated by curiosity rather than capital. There was no demand to perform belief, no narrative to optimize returns. The system was released - and then left alone. Looking back, Hall and Watkinson note that introducing barriers such as paid mints or royalties could have altered the project fundamentally: “making the CryptoPunks not free to claim, or attempting to charge a royalty on sales may have changed the entire trajectory of the project, or possibly even caused it to fail to take off at all.” (Proof of Punk: When Code Became Culture, in Collecting Art Onchain, 2025. p. 76.)

By refusing to over-determine outcomes, the creators allowed the network to do its own work. Trust did not emerge from authority or persuasion, but from transparent code and shared uncertainty. Participation itself became the medium of meaning.

There is a metaphysical dimension here that is difficult to ignore. In complex systems, intention reveals itself retroactively, through outcome rather than declaration. When incentives are misaligned, networks fracture. When extraction is embedded, cultures thin out. When attention is coerced, belief collapses.

The sustained coherence of the CryptoPunks network suggests that something in the initial conditions was unusually clean.

At scale, meaning no longer resided in any single Punk. It emerged from the relational field among them. Value became social rather than intrinsic. As Larva Labs later reflected, even the edition size became part of this social calibration: “the project grew into the 10,000 number and it became a kind of ‘Goldilocks’ amount; rare enough to still be precious, but numerous enough that many thousands of collectors could participate and form a community.” (Proof of Punk: When Code Became Culture, in Collecting Art Onchain, 2025. p. 79.) The success of the 10,000 model was not about numbers, but about alignment - intention, participation, and visibility without force.

The network did not grow because it was engineered to win.

It grew because it was given space to exist.

In 2022, CryptoPunk #305 joined ICA Miami’s permanent collection, where it was presented alongside American Lady by Andy Warhol. Image credit: ICA Photographer, Bob Foster

Ownership, Absence, and Cultural Maturity

This becomes most visible when listening to collectors. In conversations for Collecting Art Onchain, nearly everyone either owned a CryptoPunk, cited one as formative, or expressed regret at not having acquired one.

That regret is often misread as financial. It is cultural.

What is mourned is not missed appreciation, but missed proximity to a moment when collective meaning was still fluid. CryptoPunks function as temporal markers - signals of presence during a formative phase. Their absence remains psychologically active. Even non-owners orient themselves in relation to them.

Imagine the world without them: on-chain culture would likely still exist, but its shape would be different. Identity might have emerged later, through more complex or similar systems. Community might have formed around utility rather than faces. Meaning would have accumulated unevenly, through isolated experiments rather than a shared early moment of learning.

What becomes clear is this: CryptoPunks mattered less as objects than as catalysts. They set network effects in motion that compounded over years, not weeks. Attention led to recognition. Recognition to belonging. Belonging to memory. Memory to culture. As Hall and Watkinson themselves describe, “We think of the Punks as primarily an interactive work. It gains importance and significance due to the interactions of the people and organizations that own them.” (Proof of Punk: When Code Became Culture, in Collecting Art Onchain, 2025. p. 85.)

Seen this way, the emergence of custodial structures like NODE is not a rupture, but a consequence. Mature networked cultures begin to care for themselves - not to fix meaning, but to preserve the conditions under which meaning can continue to evolve.

CryptoPunks did not simply demonstrate a successful project.

They demonstrated what it looks like when a decentralized culture grows up.

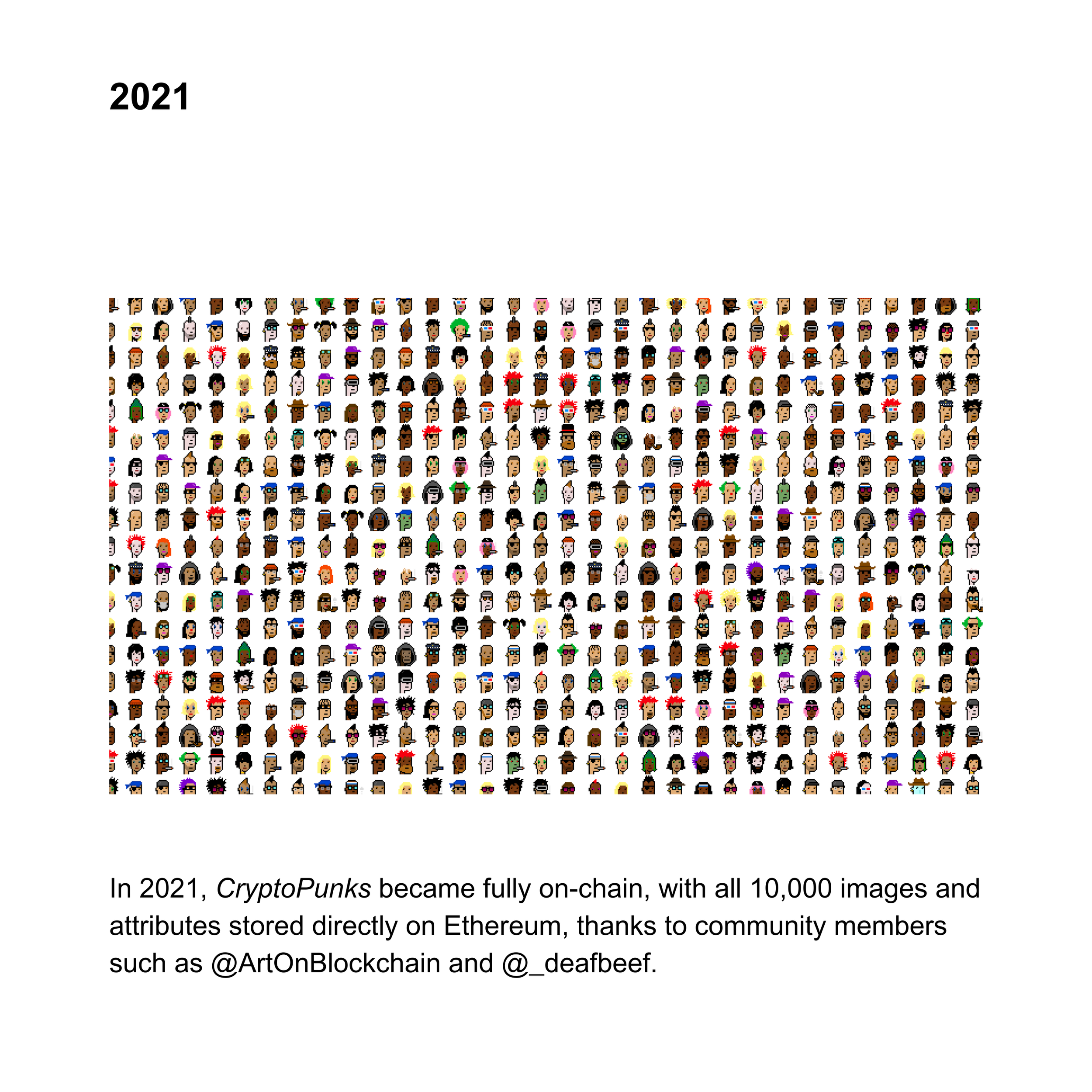

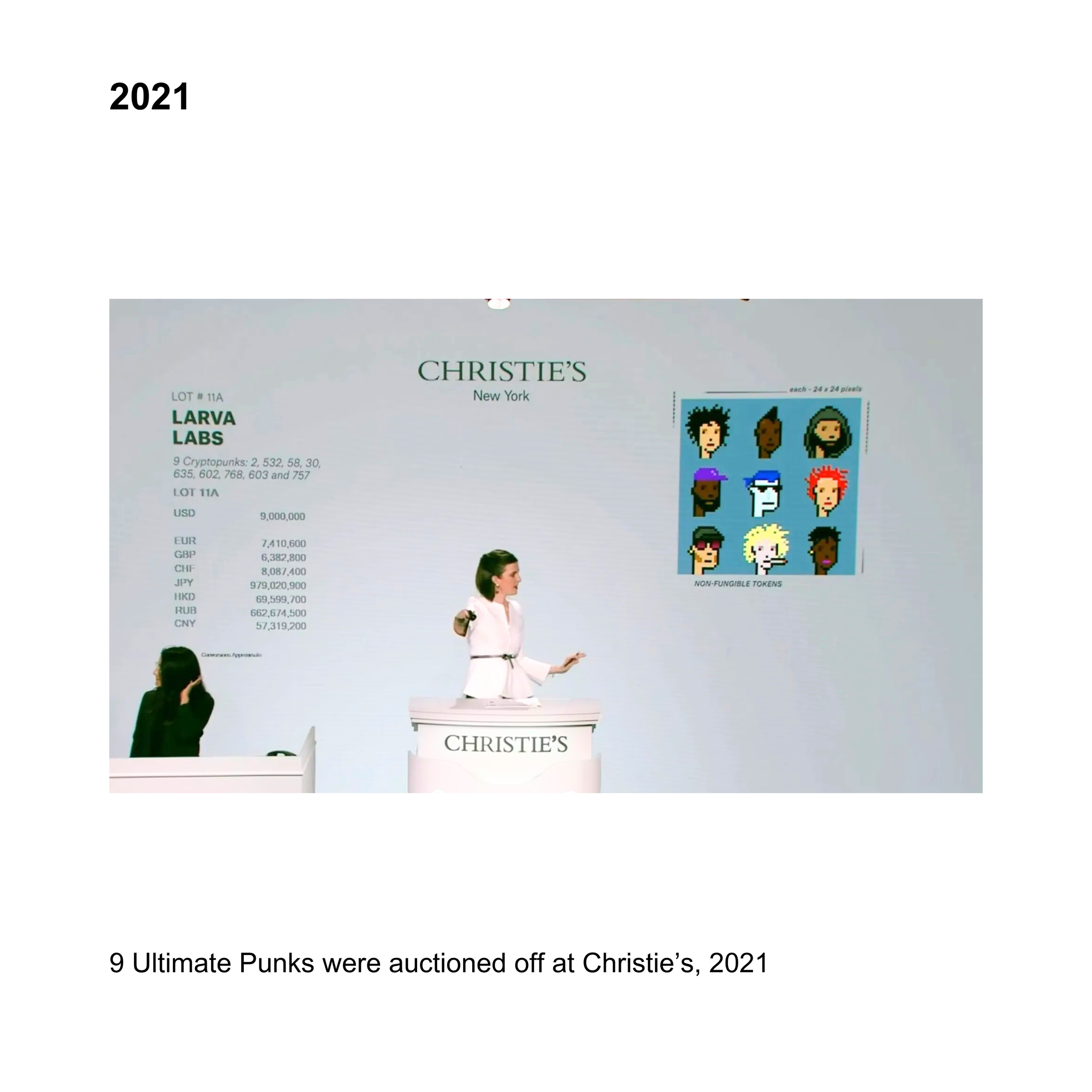

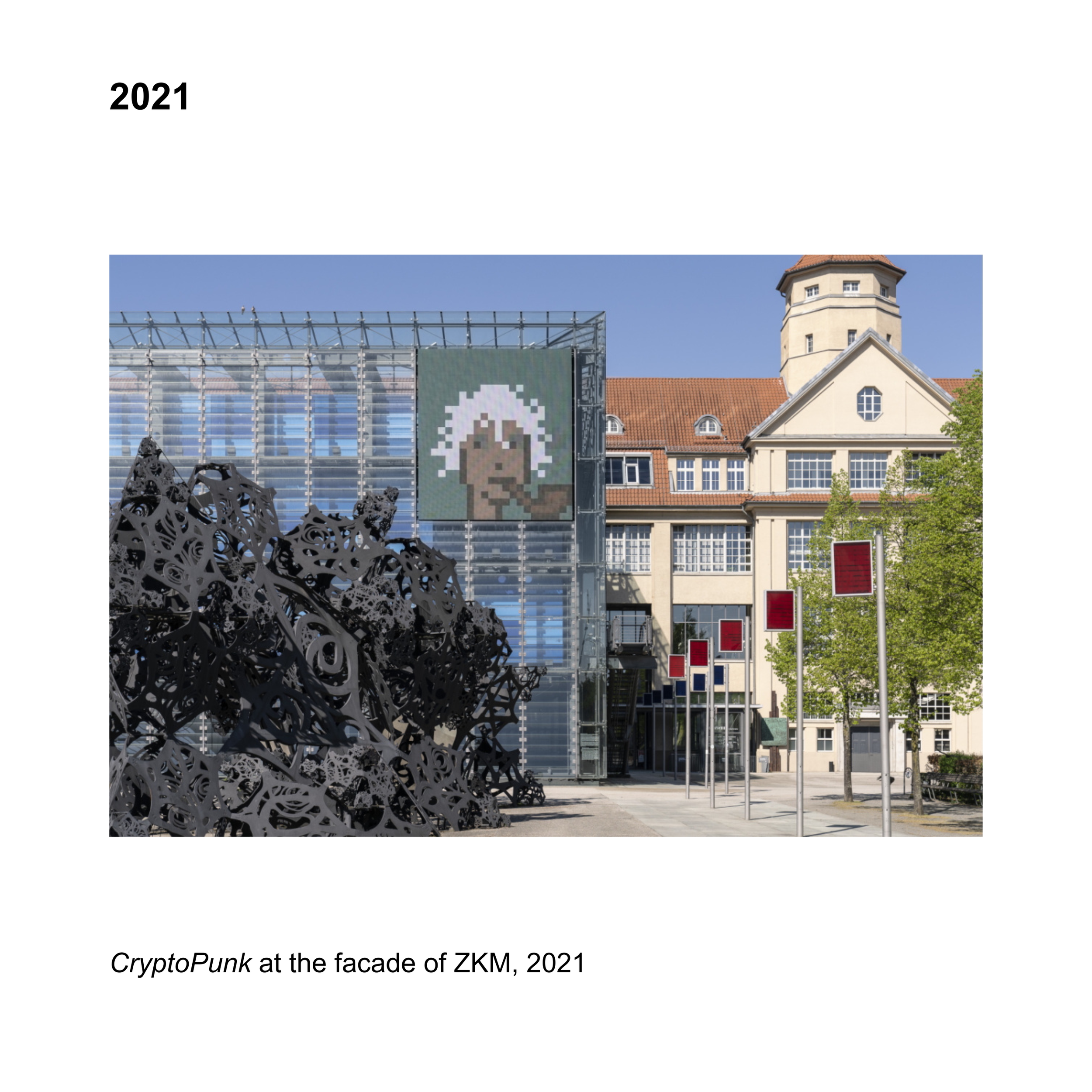

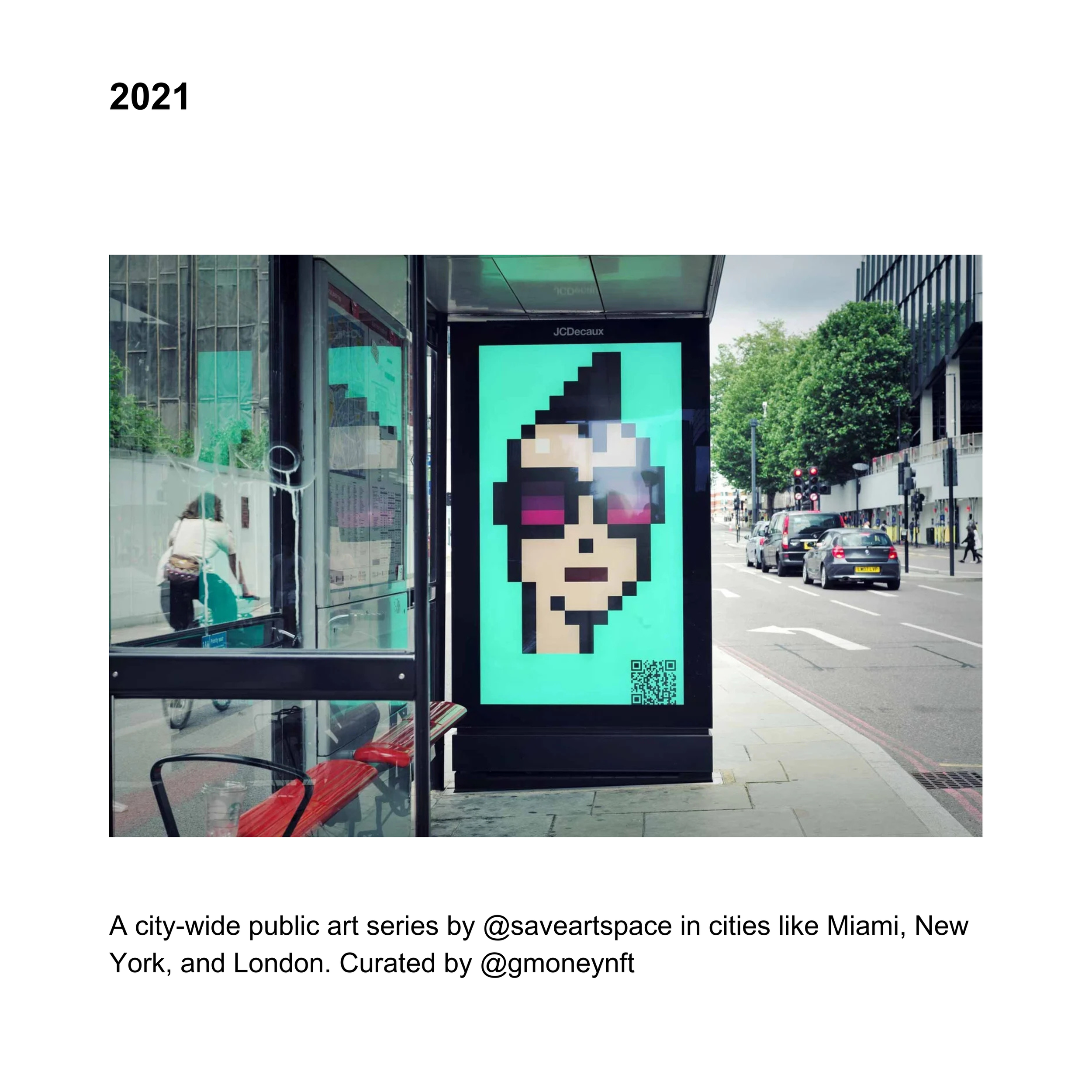

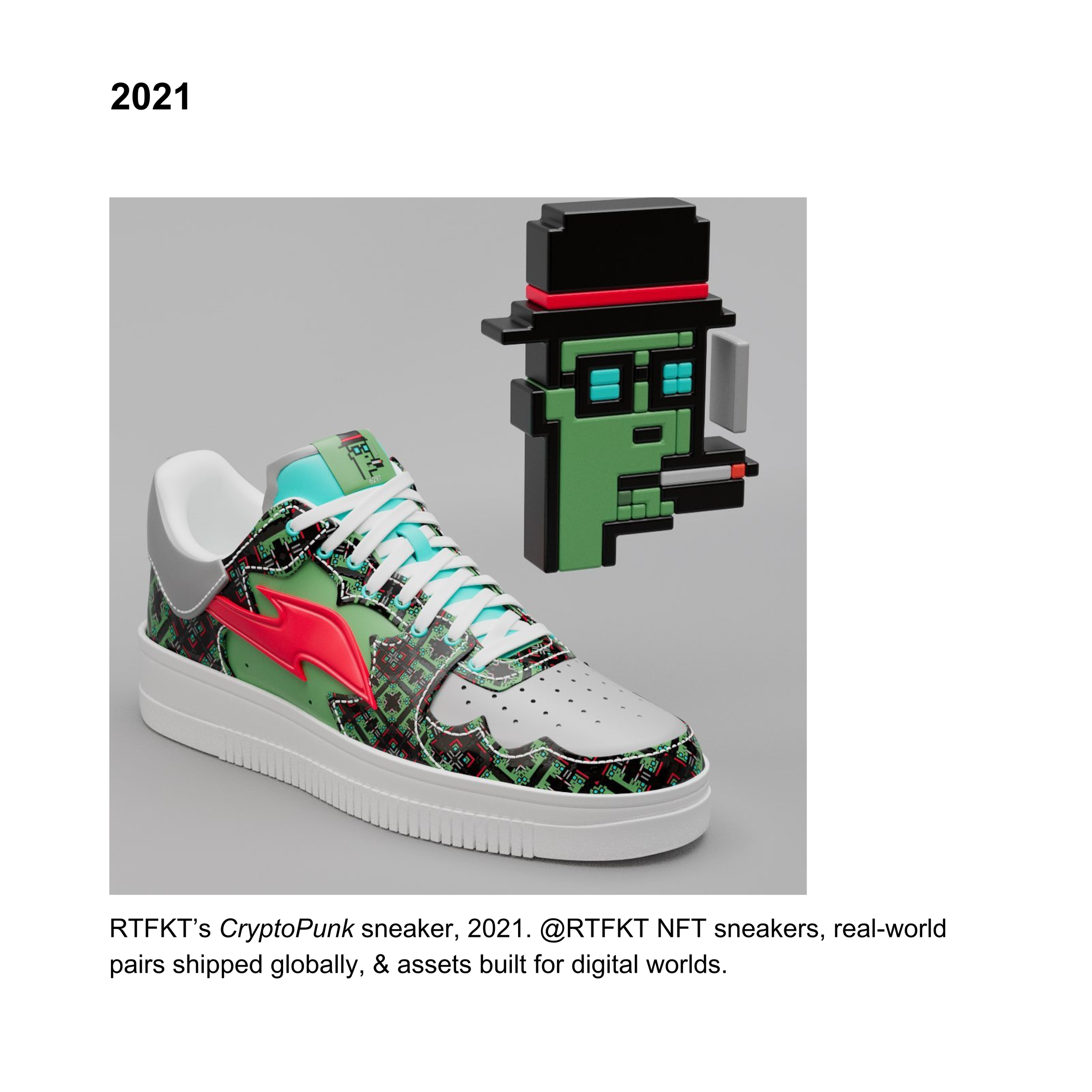

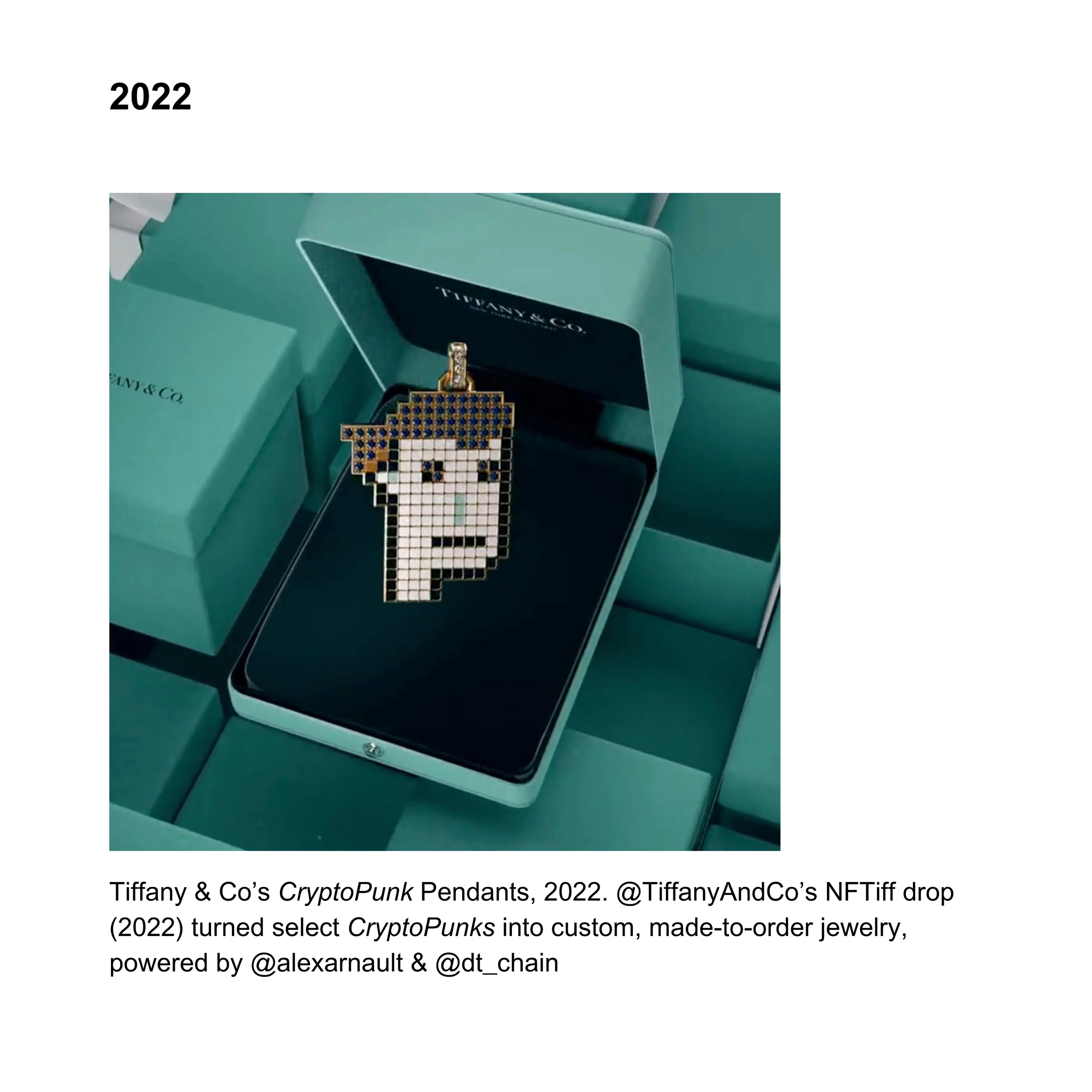

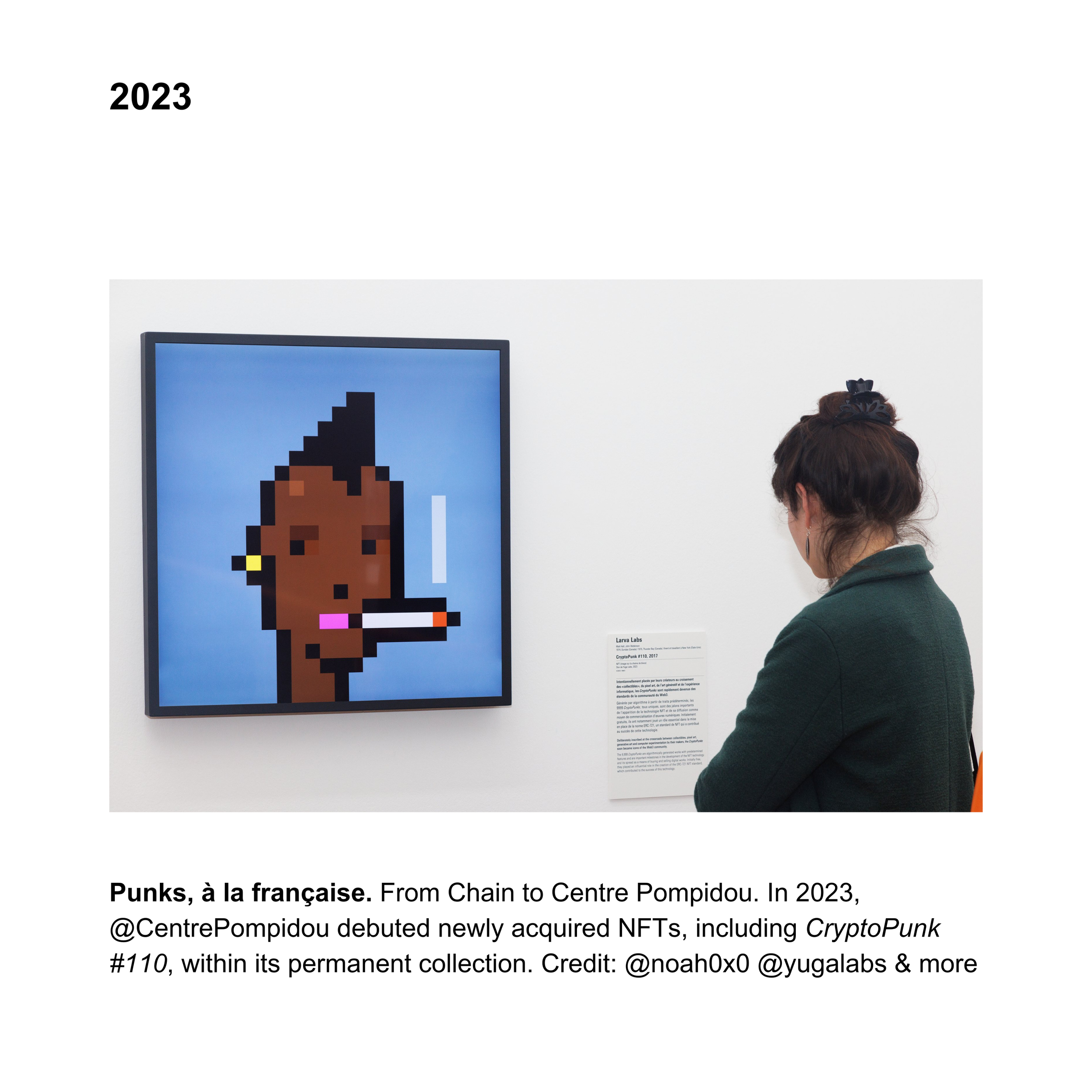

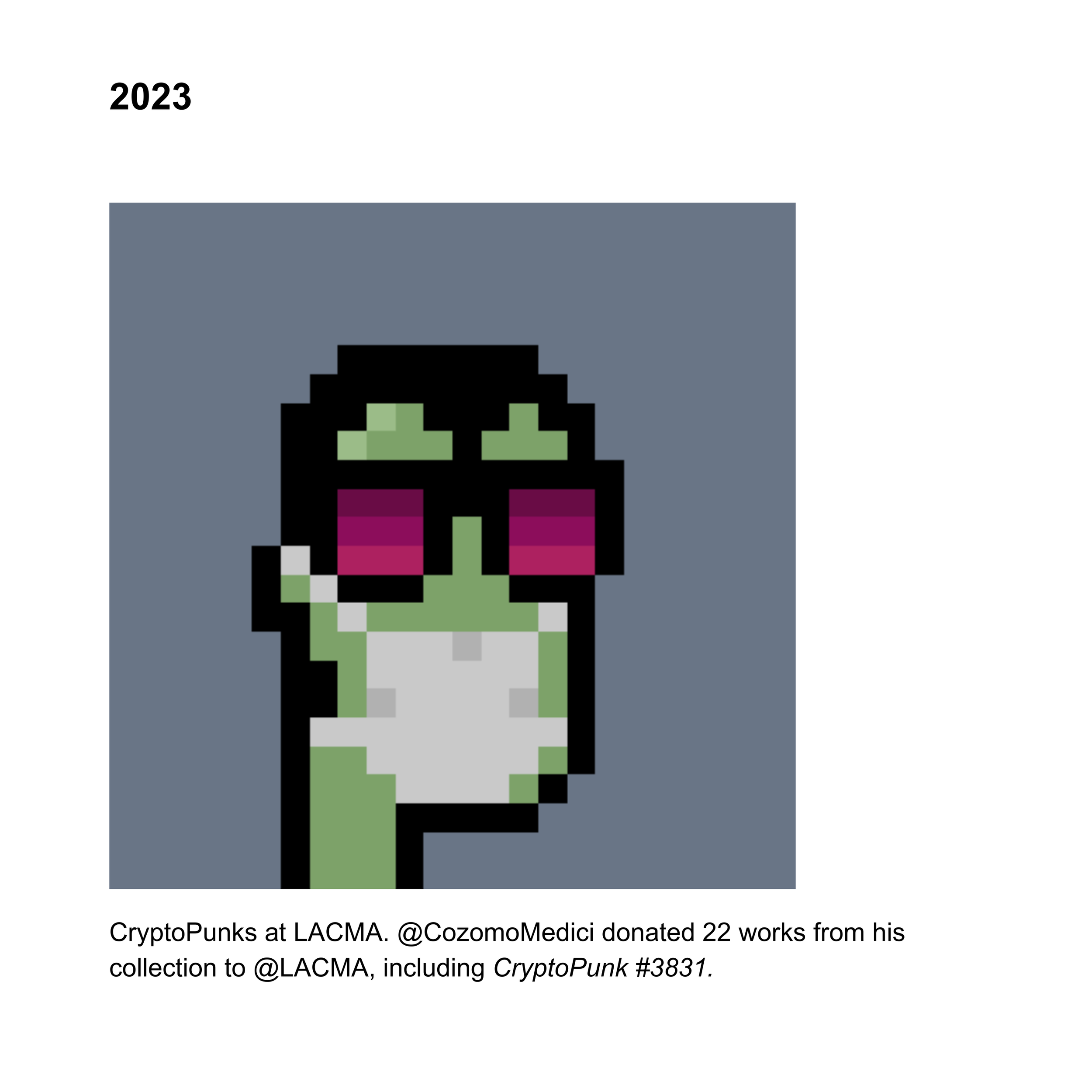

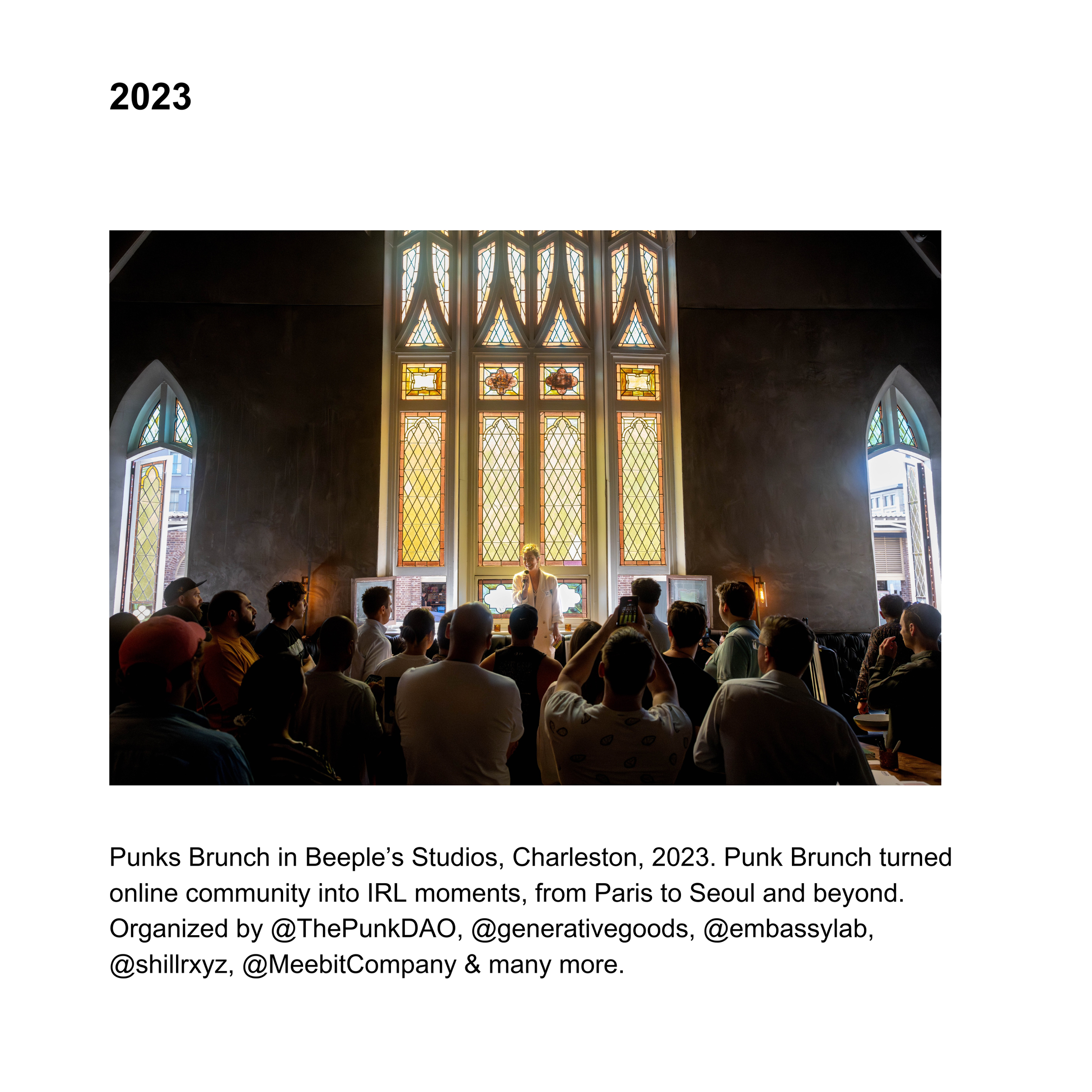

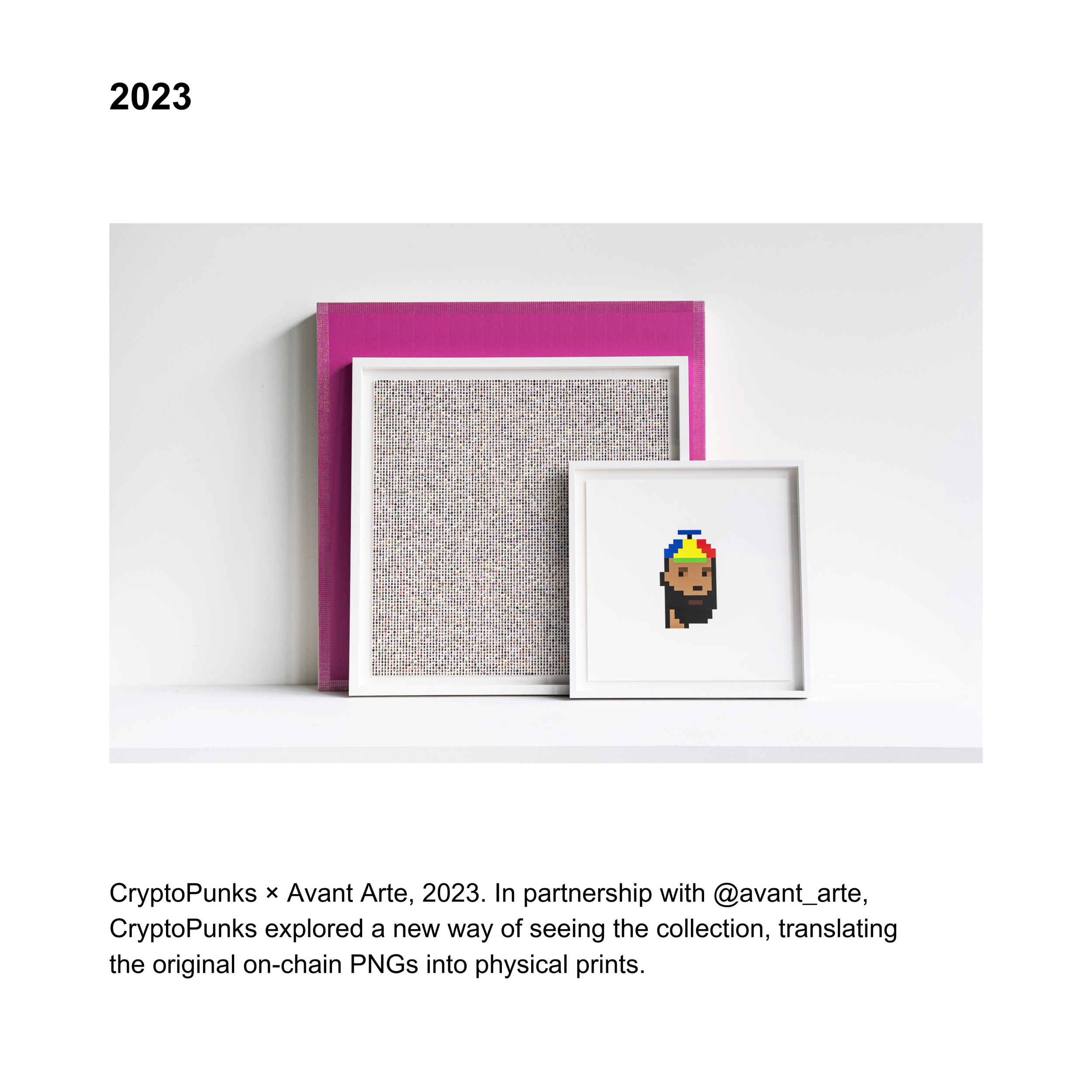

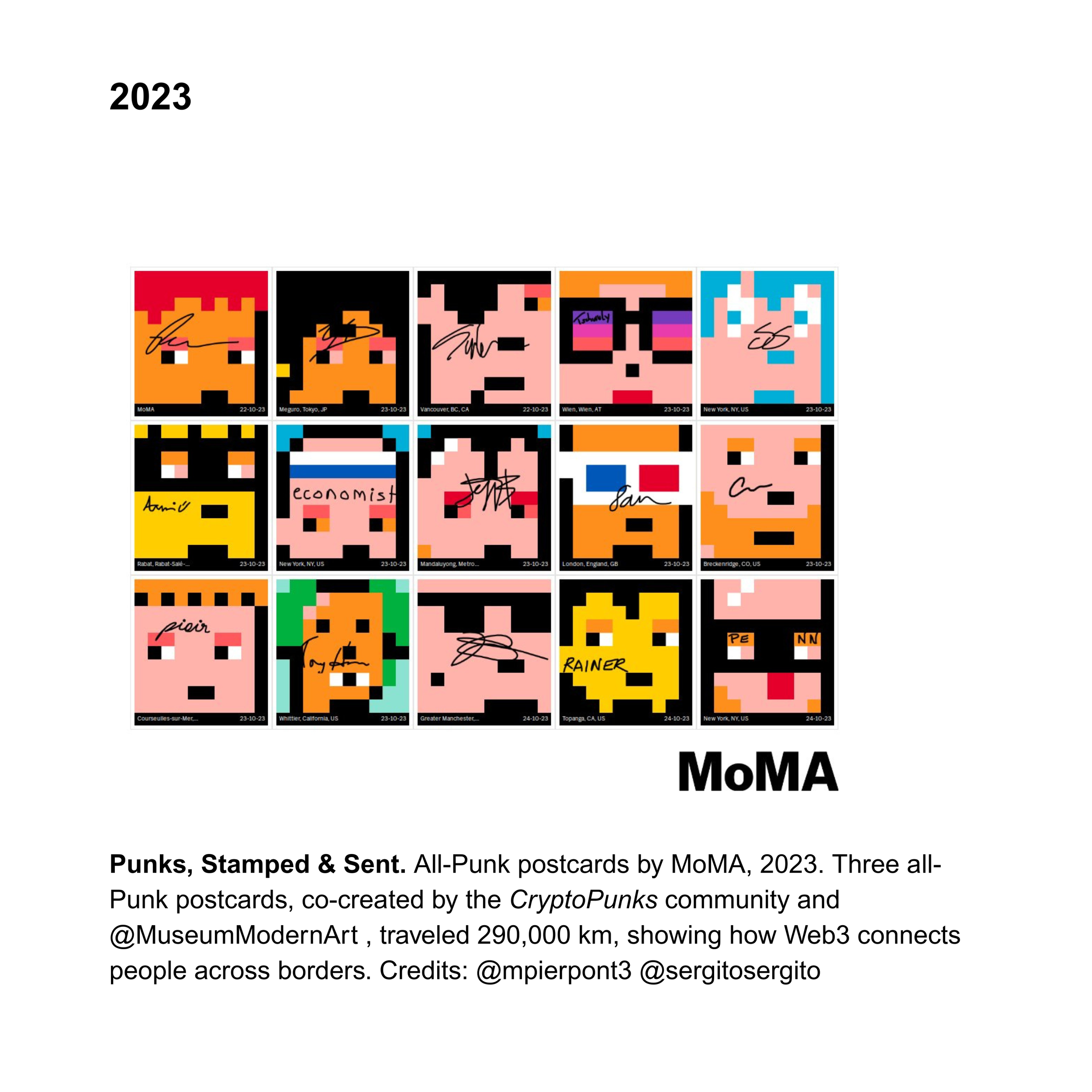

Some key milestones in the cultural life of CryptoPunks 2017-2026:

Disclaimer: This essay does not retrace the full technical or chronological history of CryptoPunks, nor the detailed formation of the NFT ecosystem around them. Instead, it focuses on how CryptoPunks came to be recognized, inhabited, and sustained as a cultural form, examined retrospectively through attention, participation, identity, and collective meaning.

A full, in-depth interview with Matt Hall and John Watkinson is published in Collecting Art Onchain, in the chapter “Proof of Punk: When Code Became Culture” (pp. 70–87).

More information about the book can be found at: https://book-onchain.com/

10,000 CryptoPunks – Opening weekend

1.23-25.2026

Palo Alto

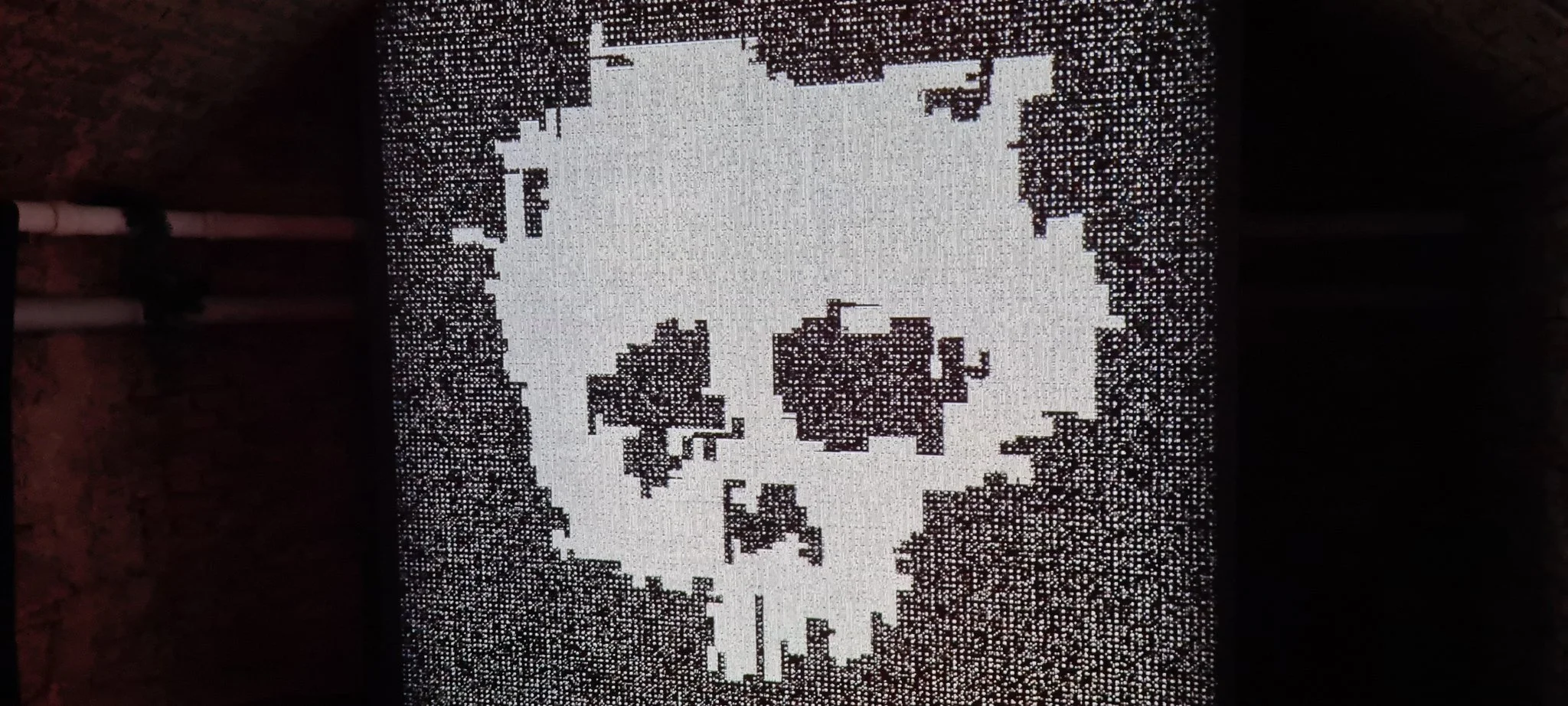

NODE opens with 10,000, the first exhibition devoted to CryptoPunks, created by Matt Hall and John Watkinson (Larva Labs) in 2017. CryptoPunks is among the most influential works of digital art ever produced; it introduced a new paradigm for authorship and ownership online, inspired the Ethereum blockchain standard, and marked a cultural shift in how identity, provenance, and value can exist in purely digital form. 10,000 presents CryptoPunks as a living artwork: a self-running system whose marketplace is foregrounded as an integral part of the art – every offer, bid, sale, and transfer is both an economic event and an aesthetic one.

Learn more about the full weekend programme here:

Are We Doomed - or Simply Miscommunicating?

(XCOPY, Mixed Signals, and the Psychology of Seeing).

XCOPY, CryptoArtLand, 2020.

In the days following Art Basel Miami’s Zero 10 activation, an Observer article reignited a familiar standoff between Web3 and the traditional art world. On one side, Web3 participants argued - once again - that critics had “missed the point” of XCOPY’s work: its critique of systems, its intentional instability, its refusal to behave like art is supposed to behave. On the other, traditional critics insisted they had done nothing more than describe what they encountered: a laundromat installation, millions of free NFTs, and a spectacle that vanished almost as quickly as it appeared.

But this recurring debate misses the more interesting question.

The issue is not who is right.

The issue is whether any of us - Web3, institutions, critics, collectors - truly understand how XCOPY is being communicated, and how that communication shifts as it moves across contexts.

What looks like misunderstanding may, in fact, be a structural problem of transmission.

The Paradox of Displaying Non-Canonical Art

XCOPY’s art is often discussed in relation to entropy, unreliability, and systems collapsing under their own weight, frequently understood as reflecting the volatility, speed, chaos and repetition of internet culture itself. From the beginning, it existed in unstable conditions: distributed across ephemeral platforms, endlessly looped, circulating as files that felt disposable rather than fixed. Images repeated themselves, copied, saved, reposted, and reinterpreted.

His work did not invite slow contemplation in controlled environments; it thrived on friction, volatility, and misalignment. It resisted the mechanisms through which art typically stabilizes: archival coherence, institutional framing, and the gradual smoothing of edges that accompanies canon formation.

In this sense, XCOPY’s work was not merely anti-canonical in style.

It was anti-canonical in behavior.

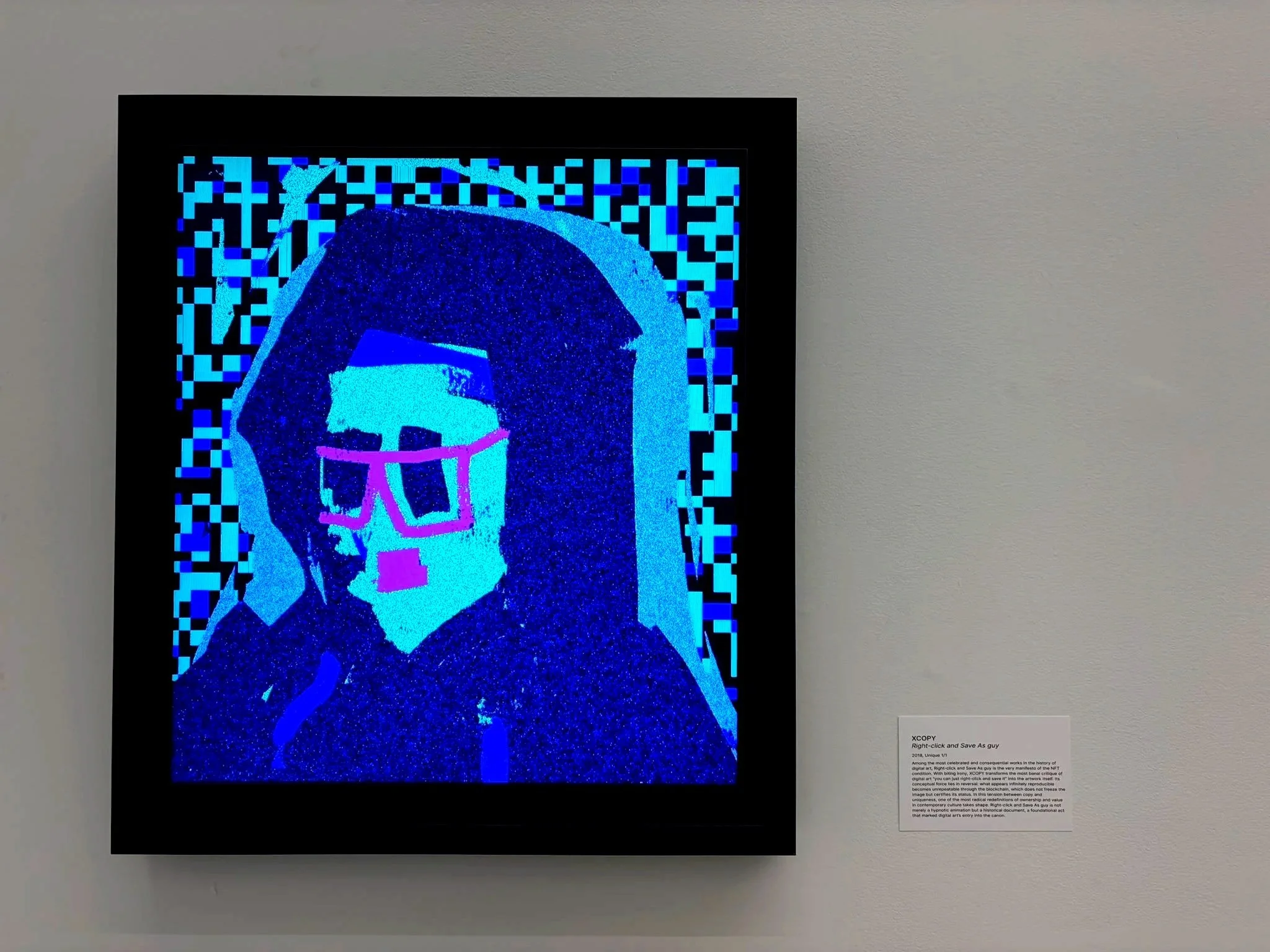

Something feels askew about seeing XCOPY, in 2025, neatly displayed on wall-mounted screens inside a physical gallery. Presenting these works as a stabilized “timeline” within a clean white-cube setting risks softening the very instability that gives them their force. This is not a critique of the display itself, but a reflection on the viewer’s perception - especially when approaching an artist whose work was conceived to disrupt canonization rather than settle into it.

The SuperRare gallery, however, stands as a meaningful exception. It carries a particular legitimacy as a kind of homecoming: the platform where XCOPY minted his early 1/1 digital works. In this context, the exhibition, Tech Won’t Save Us, reads less as institutional containment and more as an evolution - both of SuperRare and of the artist - tracing a trajectory from the digital canvas into physical gallery space.

Rather than neutralizing the work, the setting reveals an interesting expansion of XCOPY’s practice, and of the platform itself, as both move beyond their original digital parameters.

XCOPY, Right-click and Save As guy, 2018, exhibited in Tech Won’t Save Us, organized by SuperRare in collaboration with The Doomed DAO, Offline Gallery, New York.

The question is not whether this form of presentation is legitimate.

The question is more fundamental:

What does it mean to canonize anti-canonical art?

Canonization is one of the most powerful context-shifts an artwork can undergo. To canonize a work is to relocate it, to place it within new spatial, cultural, and interpretive frameworks that actively shape how it is seen.

In this sense, canonization cannot be separated from a deeper issue: how artworks change meaning as they move across contexts.

So when XCOPY appears in a white cube gallery, an underground basement, and a global art fair within the same month, radically different interpretations are inevitable.

The work has not changed.

The contexts have.

The message fractures because framing alters how the work is perceived.

XCOPY, Loading New Conflict... Redux 5, 2018.

Perception Is Never Neutral

Psychology offers a useful lens here. We do not encounter artworks as blank slates. Perception is filtered through expectation long before the eye meets the object. Cognitive science refers to this as top-down processing: the mind supplies meaning in advance, filling gaps with assumptions, cultural cues, and prior beliefs.

In other words, we rarely see what is.

We see what we expect to see.

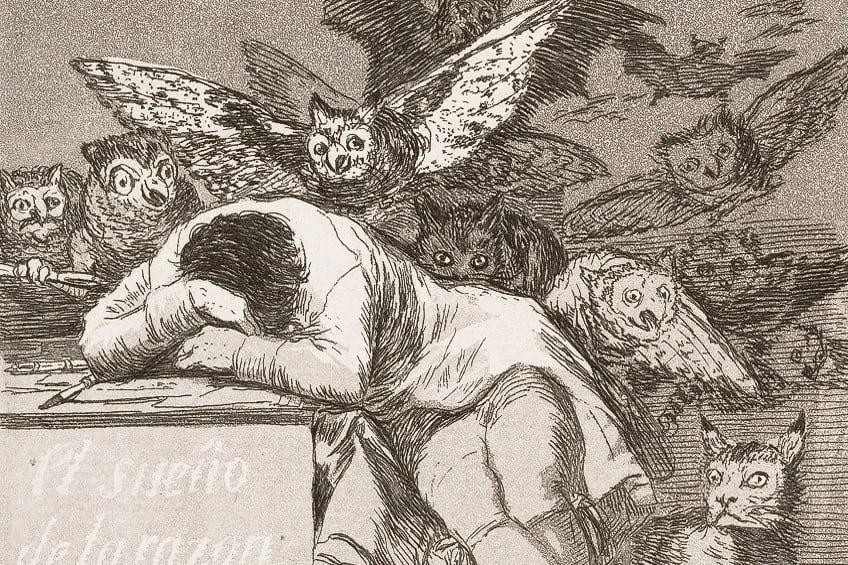

As art historian Ernst Gombrich famously observed, there is no such thing as the innocent eye. Seeing is never neutral; it is conditioned by habit, context, and belief.

Since the early twentieth century, artists and theorists have challenged the idea that an artwork carries a stable meaning independent of where and how it is encountered. Marcel Duchamp’s readymades marked a decisive turning point. They demonstrated that placement alone could transform interpretation.

Context was no longer a neutral backdrop.

It became an active producer of meaning.

Marcel Duchamp, Fountain, 1917. Source: tate.org.uk

By the late 1960s and early 1970s, this expanded into a broader awareness of framing. Daniel Buren, in essays such as The Function of the Museum (1970), argued that museums and galleries do not merely display artworks but actively shape how works are perceived, valued, and understood.

Meaning does not reside solely in the object. It emerges at the intersection of work, space, and expectation.

This becomes even clearer with digitally native works. They often accumulate meaning, value, and interpretation before they ever enter a physical exhibition space. The gallery or museum is not their point of origin, but one context among many. When they appear in physical form, they do not arrive as neutral objects. They arrive already shaped by prior circulation, community belief, and expectation.

What emerges is not a single meaning, but a series of encounters, each shaped by the conditions in which the work is met.

New York: “Tech won’t save us,” community might.

SuperRare was among the first environments in which XCOPY’s 1/1 works were minted, circulated, and meaningfully understood. Hosting Tech Won’t Save Us in New York, in collaboration with The Doomed DAO, is not an act of appropriation but of continuity - a homecoming that honors where both the artist and the platform began, while acknowledging how profoundly each has evolved.

Seen through this lens, the apparent tension between XCOPY’s destabilized, glitch-driven aesthetic and the gallery’s institutional calm becomes reflective rather than adversarial. What was once described as raw disruption now carries history. What once resisted framing now tests it. The glitches remain, but they arrive with memory. The instability is intact, yet it is viewed through the accumulated weight of time, community, and belief.

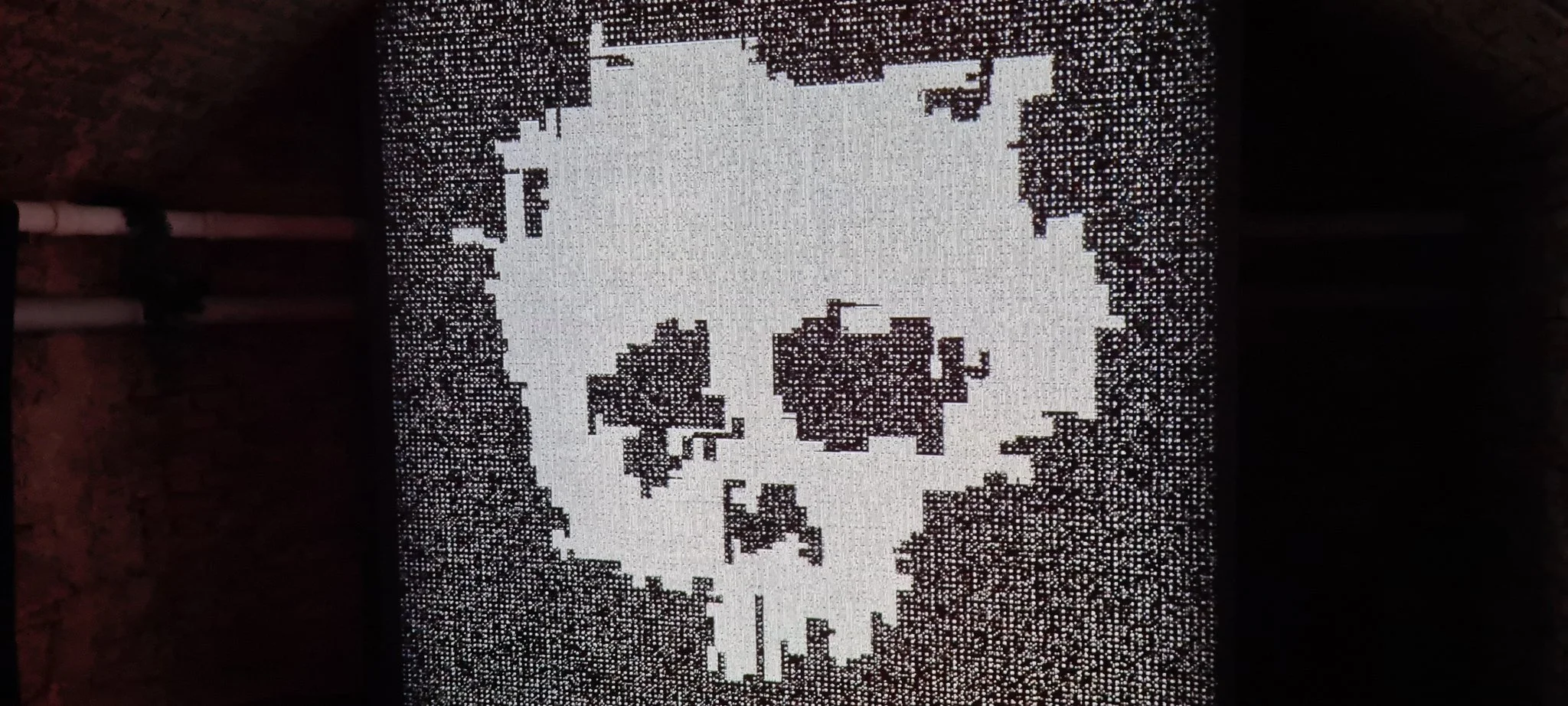

XCOPY, The Doomed (Mono), 2019, exhibited in Tech Won’t Save Us, organized by SuperRare in collaboration with The Doomed DAO, Offline Gallery, New York.

The exhibition Tech Won’t Save Us, organized by SuperRare in collaboration with The Doomed DAO, Offline Gallery, New York.

Psychologically, the gallery still activates art-historical expectation. Viewers arrive prepared to analyze, contextualize, even canonize. But here, that expectation becomes part of the work. The framing suggests permanence; the imagery resists it. The friction is not a flaw - it is evidence of change.

The exhibition’s real gravity, however, lies beyond the screens. XCOPY, SuperRare, The Doomed DAO, and a deeply committed community occupy the same space, collapsing distance between artist, platform, and audience. The artwork becomes relational not because it demands interaction, but because it is inseparable from the network that carried it forward. In this moment, the network is no longer abstract - it is embodied.

What shifts in this context is not the content of the work, but its function. The work enters the white cube already carrying its own history, beginning to operate as a cultural artifact. XCOPY’s disruption is no longer only an act of refusal; it becomes an object of sustained attention. Volatility is slowed. Instability is held long enough to be examined. The work does not lose its critical force, but it changes how it is experienced, from immediate disruption to something viewers can stay with and examine.

Perception still diverges:

Some viewers see canonization.

Some see contradiction.

Some see celebration.

All are correct.

And perhaps that, too, is a measure of how time changes us—not only the artist and the platform, but the way we learn to see, and what we believe art should be.

Vienna & Melbourne: When Art Becomes a Signal Rather Than an Object

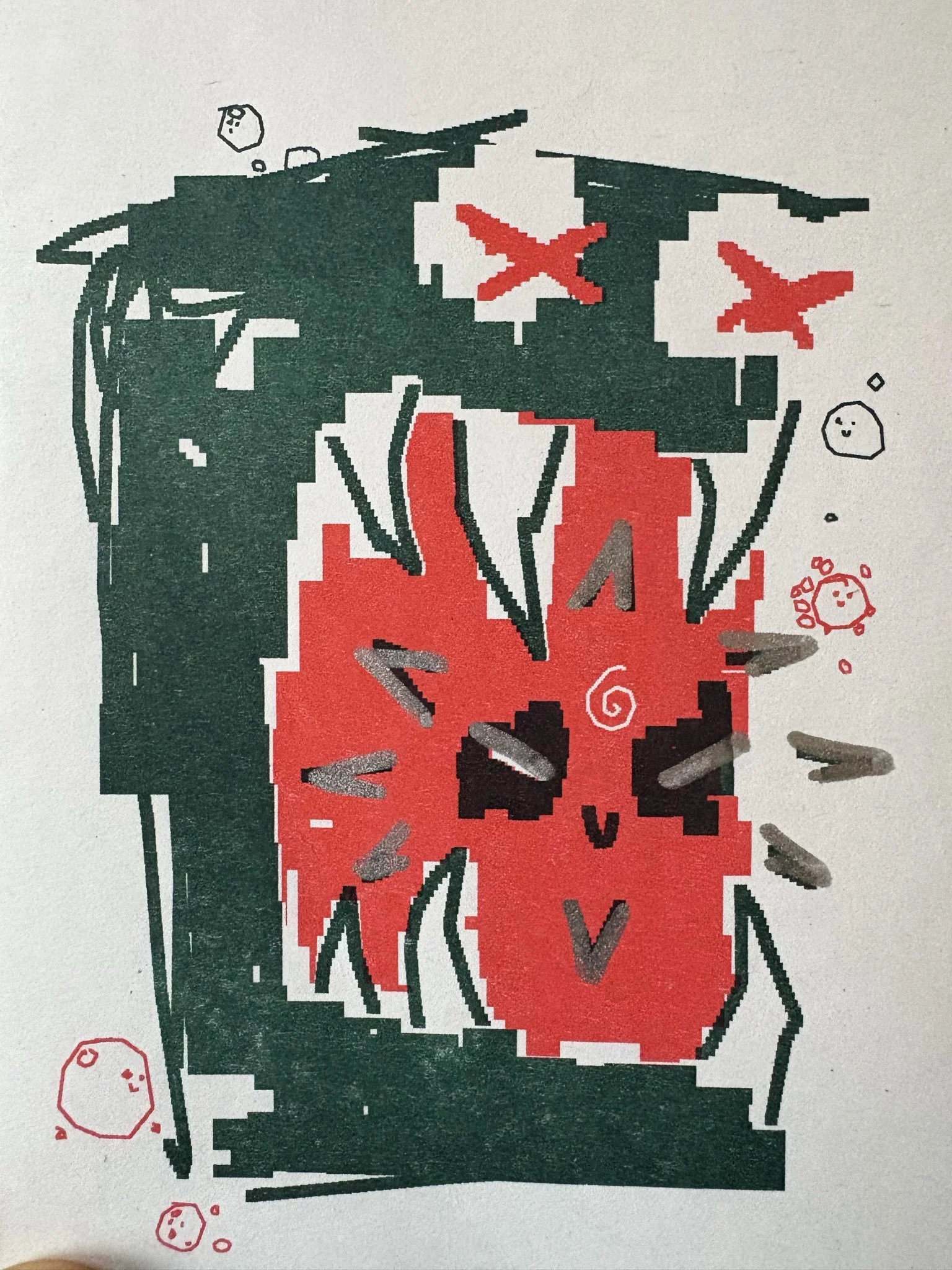

The satellite exhibition of Tech Won’t Save Us, Vienna, Austria. Organized by The Doomed DAO members @nessnisla.eth and @_mp9x.

If New York renders XCOPY a cultural artifact, Vienna treats him as a transmission.

In Vienna, as part of a satellite exhibition of Tech Won’t Save Us, the works are installed in a basement, where screens flicker against concrete walls indifferent to institutional decorum. Nothing in the space instructs the viewer on how to behave or what to think. Meaning is not ‘curated’; it is encountered.

Here, framing bias weakens. Without cues that signal “high art,” viewers generate interpretation more freely. A screen underground triggers adjustment rather than analysis. The work feels discovered, not presented.

In this environment, the work aligns closely with the visual language and cultural conditions from which it originally emerged. The absence of polish and institutional cues allows the work’s instability, discomfort, and refusal to surface more directly.

Responses vary. Some experience nostalgia - echoes of early internet culture. Others sense refusal - art that won’t resolve into comfort. Some dismiss it entirely as “screens in a basement.”

The file, of course, is unchanged.

Only the context shifts - and with it, the meaning.

Art Basel Miami: Expectation, Misperception, and the Laundromat

Art Basel Miami introduces the most acute perceptual conflict: XCOPY’s Coin Laundry, where more than 2.3 million free NFTs were claimed, all but one designed to self-destruct over the next ten years. Coin Laundry was conceived specifically for this scale and setting, where mass participation, speed, and disappearance are not side effects but core elements of the work.

Within Web3, the work was largely read as critique—a dismantling of value stability, liquidity theater, and the fetishization of ownership. Within the traditional art world, it appeared to confirm long-held suspicions: abundance without scarcity, spectacle without substance, volatility masquerading as meaning.

This is confirmation bias at work.

XCOPY, Coin Laundry, 2025, presented by Nguyen Wahed at Art Basel Miami.

People interpret information in ways that reinforce what they already believe. XCOPY dissolves value systems; critics perceive disposability. Their interpretation is not wrong - it is partial. They are not, as many claim, ‘missing the point’; they are encountering it through a familiar psychological schema.

Scale does not distort meaning; it accelerates it. When a work reaches millions, interpretation compresses, and familiar expectations assert themselves more quickly. The intention remains intact, but the conditions of reception shift.

Learning How to See — and How to Connect

The challenge is not how to show XCOPY’s work.

It is how to connect it to audiences who are not already native to its language - who do not come from crypto, blockchain, or online network cultures.

XCOPY’s practice was never built to explain itself to everyone at once. It emerged from specific conditions: digital scarcity, speculative economies, on-chain identity, and communities that understood value as volatile, temporary, and often performative. When that work enters broader cultural spaces, it encounters viewers who lack not intelligence, but context.

Perspective matters.

Expectation matters.

Experience matters.

Meaning does not fail here - it collides.

XCOPY’s work operates as conceptual art, but its concepts are encoded in network logic rather than art-historical language. For those outside that logic, the signal can register as noise. This does not invalidate the work; it reveals the gap between worlds that are now colliding.

XCOPY, the fuck you looking at?, 2020.

So the question becomes: can we learn to encounter the work without demanding immediate comprehension?

Children do this naturally.

They do not need fluency in systems to respond; they are moved by intuition. They experience first. They remain open to confusion. They allow meaning to form over time rather than insisting on resolution.

Perhaps this is the mode of viewing that XCOPY requires - not expertise, but suspension. A willingness to step back from expectation and let the work exist before asking it to perform.

If so, XCOPY’s practice is not about cleanly bridging worlds or translating itself into familiar terms. It exists in advance of mass understanding - inhabiting networked futures that will inevitably spill into physical reality, but never on stable terms.

The work does not ask to be celebrated.

It does not ask to be agreed with.

It asks only to exist long enough to be encountered - before perspective, belief, and expectation decide what it is allowed to mean.

Yet gathering, curating, narrating, canonizing - these are institutional actions no matter who performs them. Decentralization dissolves authority only for it to quietly reassemble elsewhere, often inside a Discord server.

The Doomed DAO does not resolve this tension; it holds it. It attempts to preserve entropy where systems naturally seek permanence. XCOPY never promised stability, longevity, or preservation.

A noble contradiction. In other words -

Very XCOPY ;)

xxx

Disclaimer

This text does not claim to define XCOPY’s intentions. The aim of this article is not to explain XCOPY, but to examine how meaning shifts through context, framing, and viewer perception as the work moves across platforms, spaces, and audiences. What is described here is not what the work is, but how it is seen.

Credits & Congrats

Strong work by all who shaped XCOPY’s work across contexts and cities:

New York — @SuperRare, @_MP9X and the whole @TheDoomedDAO (Tech Won’t Save Us)

Vienna — the satellite organizers who embraced friction and let the work function as signal, not object

Art Basel Miami — @artbasel @zero10art (Coin Laundry)

Melbourne & beyond — independent curators and local teams continuing to test digital art in the wild.

Well done to everyone involved.

Physical card, signed by XCOPY, distributed at his solo exhibition Tech Won’t Save Us at Offline Gallery, New York.

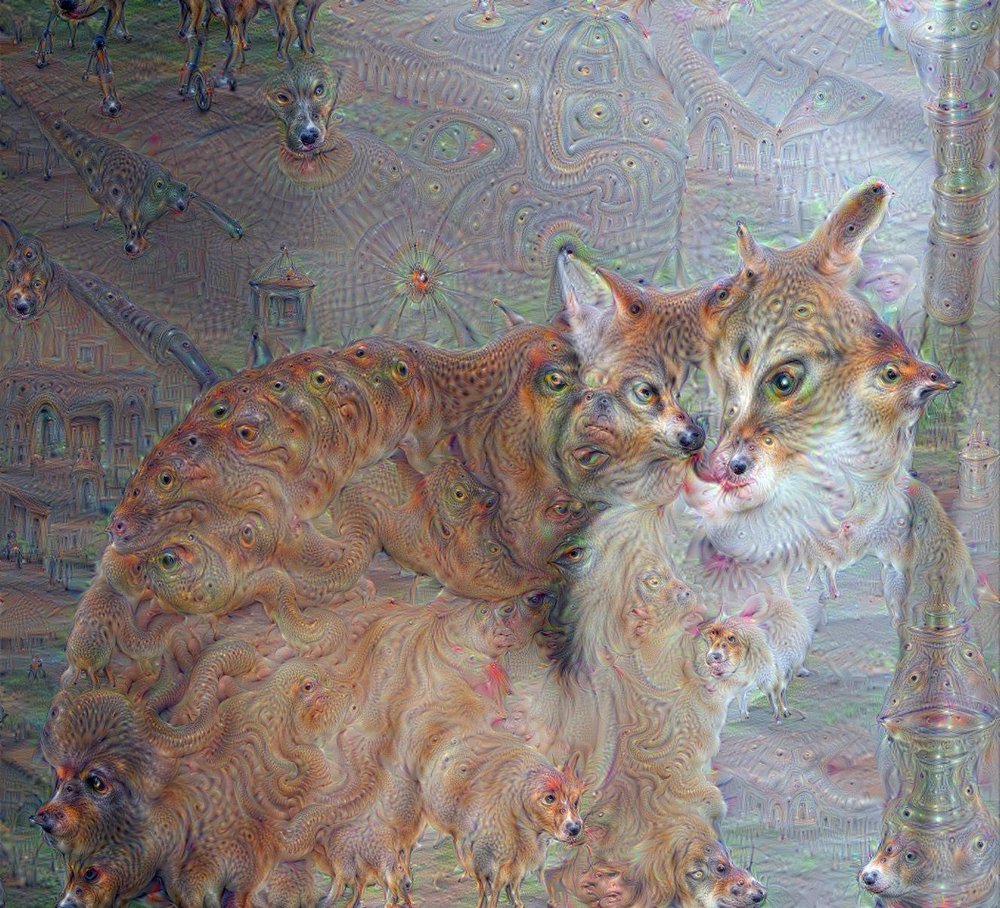

★attention economy: Beeple’s Regular Animals — Who’s a Good Dog? Whoever Gets the Most Attention.

A deep dive into how attention—not art—creates value in Web3. Featuring a case study on Beeple’s Regular Animals and the psychology behind engineered virality.

Attention is a strange currency. The more information we receive, the less we can hold. Psychologists warned of this decades ago: overstimulation erodes focus; cognition collapses under overload. Yet we keep reacting - faster, louder, more impulsively.

My last post made this painfully clear.

It generated discussion, outrage, agreement, confusion - everything except close reading: very few people actually read the full article.

Without time, slow reception, or attention to detail, no one can form a real opinion or offer meaningful critique. And that, precisely, is the point.

The most valuable currency today isn’t gold, oil, or even Bitcoin. It’s attention — the invisible architecture determining what becomes visible, valuable, celebrated, or quietly erased.

René Magritte, The False Mirror, 1928. A literal diagram of the modern internet: the eye doesn't see reality; it reflects the system back at itself. Image source: moma.org

In a world drowning in information and starved of cognition, attention is not just scarce — it is weaponized.

Psychologists warned that attention collapses under overload. Economists warned that scarcity creates markets. Today, culture forms inside the two.

Whoever shapes attention shapes narrative. Whoever shapes narrative shapes the future.

Open X and within seconds you’re pulled into a storyline you never chose — not an artwork, not an idea, but a frame engineered to feel organic.

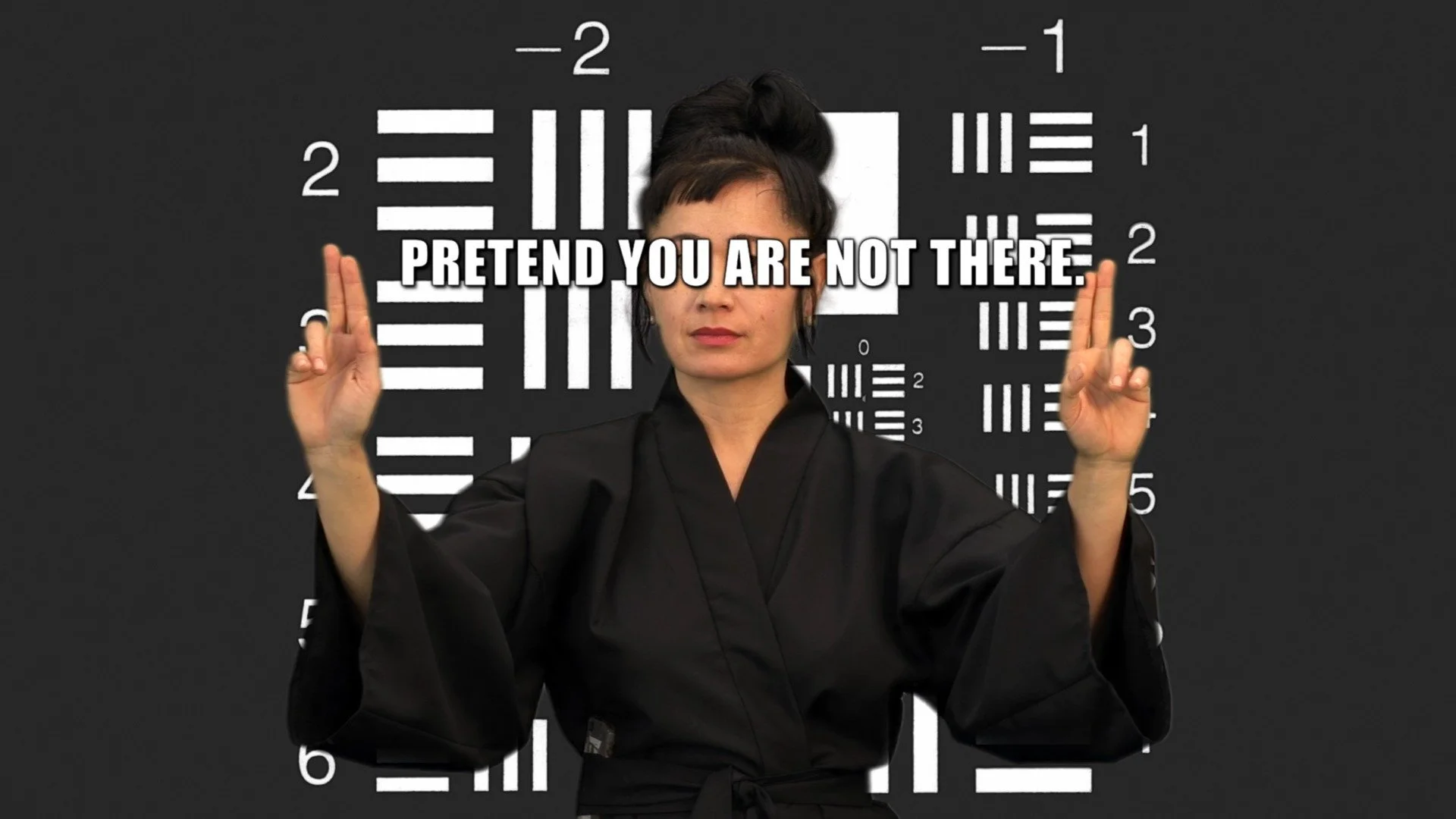

Hito Steyerl, How Not to Be Seen, 2013. A manual for surviving the visual regime. Steyerl shows what most people still refuse to admit: images aren't just pictures — they're systems of power. They compress social forces into a single frame. Image source: moma.org

Most people don’t form opinions independently — not from lack of intelligence, but because independent judgment requires three things modern culture erodes: time, context, resistance.

It’s easier to mirror what appears “liked” than risk thinking differently. And when someone diverges, the crowd rushes in to “correct” them — social pressure disguised as consensus.

Behavioral psychology has documented this for decades: when uncertainty is high, humans outsource judgment to the group. Digital art, still lacking stable institutions or literacy, amplifies this effect.

Those who command or manufacture attention now determine more than price. They determine cultural reality itself — what is seen, forgotten, declared important, or omitted entirely.

Abundance vs. the Limits of the Mind

Herbert Simon captured our era perfectly: “A wealth of information creates a poverty of attention.”

Information can scale infinitely. The mind cannot.

Pak, The Merge, 2021. Pak's highest-selling NFT, generating $91.8 million. The work is one of the best expressions of how attention becomes value. The supply of the artwork increased as more people bought “mass” during the sale — meaning the artwork literally grew through attention. Image source: barrons.com

Behavioral economists call this the attention budget: a cognitive limit beyond which every additional input forces the brain to neglect something else. When information grows exponentially and attention does not, scarcity emerges — not of capital or objects, but of focus.

Where scarcity emerges, markets form. And in attention markets:

• what stands out overwhelms what matters

• visibility becomes shorthand for value

• velocity outruns understanding

Digital culture is frantic by design. Platforms scale speed, not meaning. Web3 simply added financialization.

When the system rewards immediacy, depth becomes a risk.

Attention as a Tradeable Asset

Nam June Paik, TV Buddha, 1974. Paik's closed circuit between observer and machine symbolizes a contemporary self-reinforcing cycle of attention: a recursive loop of watching ourselves watching ourselves. Image source: pbs.org

Metrics such as likes, retweets, follower counts, wallet trackers, and “top sales” dashboards became market indicators → indicators became liquidity → liquidity became narrative.

A reflexive loop emerged:

Attention lifts prices.

Rising prices attract attention.

Attention endorses the narrative.

Narrative legitimizes value.